Volume 179

Published on August 2025

Volume title: Proceedings of CONF-MLA 2025 Symposium: Intelligent Systems and Automation: AI Models, IoT, and Robotic Algorithms

The successful deployment of intelligent robotic systems in the real world is often hampered by the “sim-to-real” gap, the discrepancy between simulated training environments and the complexities of reality. This gap arises from imperfect modeling of physics, rendering artifacts, and sensor noise, leading to policies trained in simulation failing to generalize. Domain adaptation techniques aim to bridge this gap, and recently, diffusion models have emerged as a powerful new paradigm for this task. This survey provides a comprehensive overview and contextual analysis of the application of diffusion models for domain adaptation in robotics. The paper begins by introducing the fundamental concepts of the sim-to-real gap and tracing the evolution of adaptation techniques, from domain randomization and adversarial methods to the current state of the art. The paper then presents a literature survey of recent works, categorizing them by their application in key robotics domains. Following this, a focused and in-depth case study provides a detailed walk-through of specific, influential methods, situating them within the landscape of prior work to highlight their core innovations. This survey then delves into a multifaceted discussion of the current challenges and open problems, including the critical trade-offs between computational efficiency and real-time performance, the debate surrounding generalization versus memorization, and the paramount issues of safety and reliability. The survey concludes by summarizing the state of the art and offering a perspective on the future directions of this rapidly evolving field, which is fundamentally reshaping how the industry approaches robust robotic learning.

Lane detection is a cornerstone task in autonomous driving systems, as it directly affects the vehicle’s ability to maintain lane discipline, ensure safety, and perform accurate path planning. Although U-Net-based deep learning models have demonstrated strong potential for automatic lane segmentation, their performance can degrade significantly under complex real-world conditions such as variable lighting, occlusions, and worn or curved lane markings. To address these limitations, this study proposes an enhanced lane detection framework built upon the U-Net architecture. The proposed model integrates three key improvements: (1) advanced data augmentation techniques to increase the diversity and robustness of the training data, (2) a refined loss function combining PolyLoss and contrastive loss to address foreground-background imbalance and enhance structural learning, and (3) an optimized upsampling strategy designed to better preserve spatial details and lane continuity in the output predictions. Extensive experiments conducted on the TuSimple lane detection benchmark validate the effectiveness of the approach. The enhanced model achieves an Intersection over Union (IoU) of 44.49%, significantly surpassing the baseline U-Net’s 40.36%. These results confirm that the proposed modifications not only improve segmentation accuracy but also enhance the model’s robustness and generalization capability in real-world driving scenarios. Overall, this work contributes practical insights and techniques that can facilitate the deployment of lane detection systems in intelligent transportation and autonomous vehicle platforms.
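
For readers unfamiliar with the Intersection over Union (IoU) metric quoted above, the following is a minimal sketch of how it is commonly computed for binary segmentation masks. The function name `binary_iou`, the toy masks, and the convention for two empty masks are illustrative assumptions; the abstract does not describe the authors' evaluation code.

```python
import numpy as np

def binary_iou(pred_mask, true_mask):
    """IoU between two binary masks of the same shape (hypothetical helper)."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    intersection = np.logical_and(pred, true).sum()
    union = np.logical_or(pred, true).sum()
    # Assumed convention: two empty masks count as a perfect match.
    if union == 0:
        return 1.0
    return float(intersection / union)

# Toy 3x4 masks standing in for predicted and ground-truth lane pixels.
pred = np.array([[0, 1, 1, 0],
                 [0, 1, 1, 0],
                 [0, 0, 1, 0]])
true = np.array([[0, 1, 0, 0],
                 [0, 1, 1, 0],
                 [0, 1, 1, 0]])

iou = binary_iou(pred, true)  # 4 shared pixels out of 6 in the union
print(f"IoU = {iou:.4f}")
```

Because lane pixels occupy only a small fraction of each image, IoU is far more sensitive to thin-structure errors than pixel accuracy would be, which is one reason it is a common choice for segmentation tasks of this kind.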

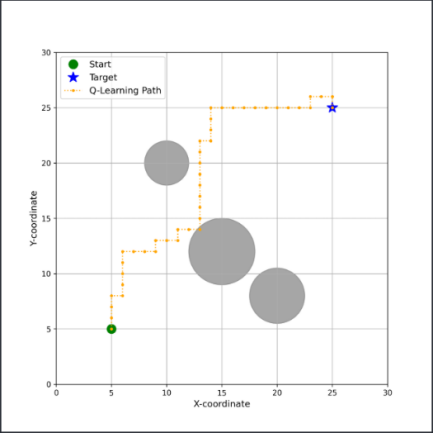

Unmanned aerial vehicles (UAVs) are widely used in areas such as monitoring, delivery, and disaster rescue because they can operate in harsh environments. Classic UAV path planning algorithms rely on accurate environment maps known in advance. How can a UAV quickly learn suitable routes under unknown real-world conditions? This is a crucial research problem for UAV path planning in practice. Autonomous path planning has become more important as the range of UAV applications keeps growing. Unlike other navigation technologies, reinforcement learning (RL) lets drones learn to navigate solely through interaction with an area, without maps or 3D models of that region. This paper therefore first surveys and analyzes reinforcement learning in UAV path planning and then summarizes the key advantages and current drawbacks of typical RL methods for navigating autonomous agents between waypoints, ranging from Q-learning to modern methods such as A3C and HRL. Finally, it concludes that advantage-function-based algorithms that yield smooth policies are well suited to constructing smooth motion plans in continuous state spaces, while multi-layer hierarchical architectures are also a reasonable option, mainly for larger-scale instances. These conclusions point next-stage research toward optimizing UAV autonomous motion systems and, more broadly, toward more intelligent flight control agents.
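
As a point of reference for the Q-learning family mentioned above, here is a minimal sketch of the standard tabular Q-learning update. The table size, the learning rate `alpha`, the discount factor `gamma`, and the toy transition are illustrative assumptions and are not taken from the surveyed paper.

```python
import numpy as np

def q_learning_update(q_table, state, action, reward, next_state,
                      alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the table."""
    # Temporal-difference target: immediate reward plus the discounted
    # value of the best action available in the next state.
    td_target = reward + gamma * np.max(q_table[next_state])
    # Move the current estimate a fraction alpha toward the target.
    q_table[state, action] += alpha * (td_target - q_table[state, action])
    return q_table

# Hypothetical toy problem: 3 grid cells (states), 2 moves (actions).
q = np.zeros((3, 2))

# The agent takes action 1 in state 0, receives reward 1.0, lands in state 1.
q = q_learning_update(q, state=0, action=1, reward=1.0, next_state=1)
first_value = q[0, 1]

# A later transition into state 0 now bootstraps from the learned value.
q = q_learning_update(q, state=2, action=0, reward=0.0, next_state=0)
second_value = q[2, 0]

print(first_value, second_value)
```

The update needs only observed transitions, not a map of the environment, which is the property the abstract highlights when contrasting RL with classic map-based planners.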

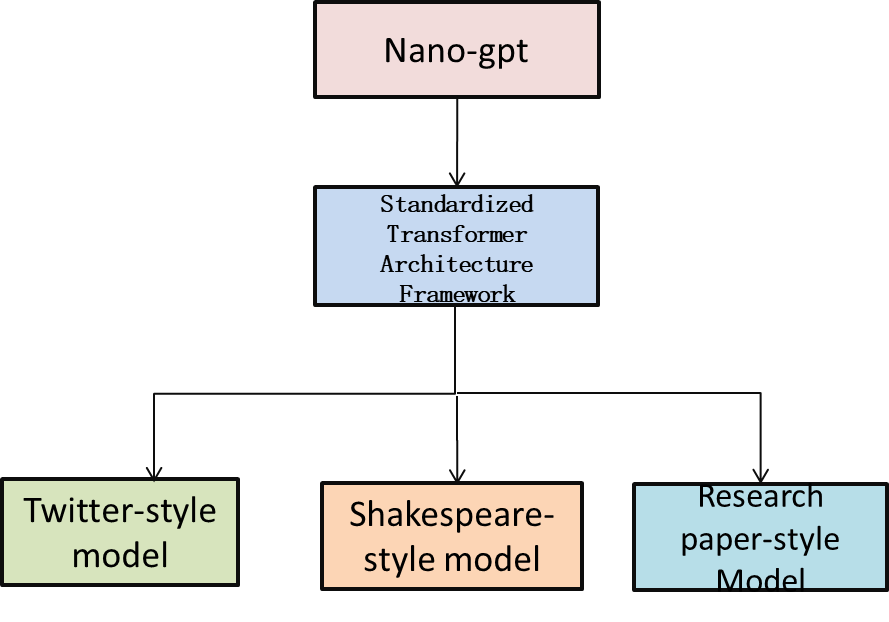

To address the growing demand for efficient natural language processing capabilities on resource-constrained edge devices, lightweight transformer architectures like Nano-GPT have emerged as essential solutions. However, their operational efficiency is profoundly influenced by the domain characteristics of their training data. This comprehensive investigation employs Nano-GPT progressively trained on three distinct datasets—Twitter conversations, scientific publications, and Shakespearean literature—identifying optimal validation loss at 20,000 training iterations while demonstrating peak text generation performance. Given significant variations in convergence patterns across domains and practical constraints in edge deployment scenarios, we standardized the evaluation framework at 5,000 iterations for consistent preliminary assessment. Through meticulously designed cloud-based experiments under rigorously controlled conditions—where data domain served as the sole independent variable—we quantitatively measured domain-specific impacts on three critical deployment metrics: inference latency, memory footprint, and energy consumption per operation. Our empirical findings conclusively demonstrate that data domain characteristics fundamentally determine compact models' real-world deployment efficiency, establishing a critical correlation between linguistic properties and computational resource requirements. These insights provide actionable guidance for selecting domain-appropriate models and optimizing architecture configurations in edge intelligence applications, particularly for IoT devices with stringent power and computational constraints.

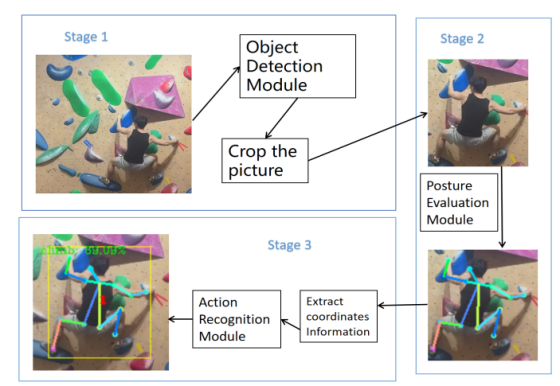

Identifying dangerous human actions is vital for safety monitoring. To address the limitations of traditional methods, this study develops an efficient pedestrian dangerous-behavior recognition system that enhances monitoring capabilities and has practical application value. The system adopts a modular design with four core modules: human detection, posture estimation, behavior recognition, and result output. It can detect and warn of five dangerous behaviors, such as throwing objects and climbing obstacles, in real time. The posture estimation module addresses the shortcomings of the classic HRNet by adopting the improved SCite-HRNet model. This model optimizes computational efficiency and feature expression ability, maintaining accuracy (an AP of 65.9 on the COCO val2017 dataset) while significantly reducing computational load (its parameter count is only 18% of MobileNetV2's), which significantly improves its applicability on mobile devices. Experimental verification demonstrates the effectiveness of the model improvement. The system is developed with PyTorch on Ubuntu, using CUDA acceleration and multi-threaded processing, and achieves a real-time processing speed of at least 25 frames per second on an RTX 2080Ti graphics card. Tests on 15,000 labeled multi-scenario images show that the system is robust in complex environments. The system provides an intuitive visual monitoring interface, and the final classification accuracy reaches 99%.

The rapid progress in artificial intelligence (AI), especially in deep learning and reinforcement learning, is driving new opportunities in neuroscience, enabling innovative solutions to a range of brain-related problems. This paper explores how AI technologies can be applied to specific issues in brain science, particularly in the practical applications of brain-machine interfaces, neuroimaging analysis, and neural network modeling. Through a review of relevant literature and analysis of case studies, it highlights how AI, particularly deep and reinforcement learning, draws inspiration from neural mechanisms to effectively simulate and interpret brainwaves, imaging data, and other complex neurological signals. In particular, brain data like functional magnetic resonance imaging, electroencephalography, and electrical signals are analyzed using deep neural networks (DNN), convolutional neural networks (CNN), and reinforcement learning models. The performance of brain-machine interfaces is shown to be significantly enhanced, and notable improvements are observed in the early detection of neurodegenerative diseases. However, major challenges remain in AI applications, including the complexity of signal decoding, interference from data noise, and the high computational demands of real-time processing. The results show that integrating AI with brain science offers clear benefits but also presents challenges, highlighting the need for improved algorithms and stronger interdisciplinary collaboration in future research.

As intelligent human-computer interaction (HCI) evolves, the ability of systems to accurately perceive and respond to human emotions has become increasingly crucial. Emotional perception allows machines to adapt and react empathetically, making interactions more natural and engaging. This paper reviews current EEG-based emotion recognition techniques, focusing on key steps such as preprocessing, feature extraction, and machine learning models. Specifically, we explore models such as Support Vector Machine (SVM), Long Short-Term Memory (LSTM) networks, and Deep Belief Networks (DBN), all of which have demonstrated promising results in classifying emotional states from EEG signals. In addition, we compare some of the most recent approaches in the field, including MCD_DA, a method developed at Hebei University of Technology. This technique addresses the challenge of cross-subject adaptation: recognizing emotions in new individuals not seen during training is crucial for real-world applications, yet many emotion recognition systems struggle to generalize to new subjects because of individual differences in brainwave patterns. MCD_DA attempts to solve this problem, making the technology more robust and scalable.

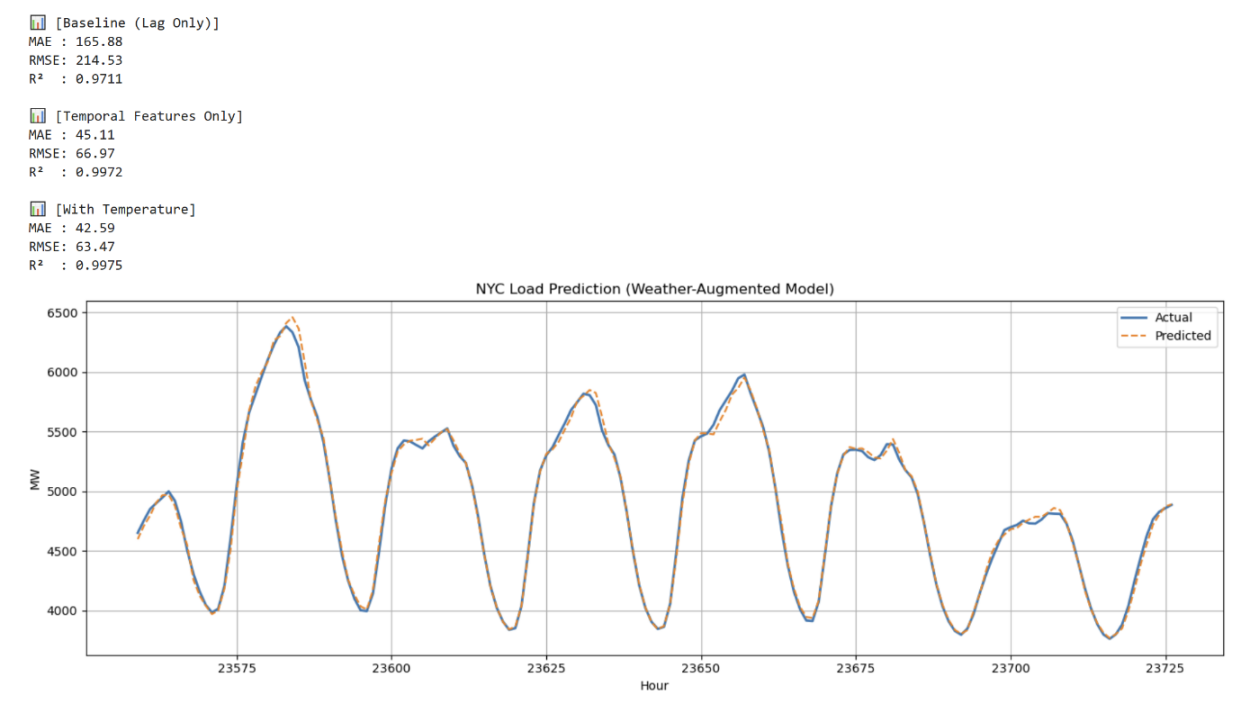

Understanding electricity consumption at a fine-grained spatial level is vital for equitable infrastructure planning in cities. This study analyzes electricity usage patterns across New York City using multiple machine learning models, including time series forecasting with Prophet, classification with Random Forest, and regression with ensemble models. The study examines 2021–2024 monthly electricity consumption data at the borough and neighborhood level to identify high-demand zones, assess prediction accuracy, and evaluate spatial disparities in energy allocation. Using a combination of Gini coefficients, model residuals, and geospatial visualization, the study reveals significant inequalities in model performance and projected load trends. These findings underscore the importance of integrating fairness diagnostics into urban energy modeling, even when using standard public datasets and minimal input features.
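
To make the Gini coefficient mentioned above concrete, the sketch below computes it with the standard rank-based formula for non-negative values. The function name `gini` and the sample neighborhood figures are hypothetical; the abstract does not state which quantities the authors feed into the coefficient.

```python
import numpy as np

def gini(values):
    """Gini coefficient of non-negative values: 0 means perfectly equal."""
    x = np.sort(np.asarray(values, dtype=float))
    n = x.size
    total = x.sum()
    # Assumed convention: an empty or all-zero input has no inequality.
    if n == 0 or total == 0:
        return 0.0
    ranks = np.arange(1, n + 1)
    # Rank-based closed form, equivalent to the mean absolute difference
    # between all pairs divided by twice the mean.
    return float(2.0 * np.sum(ranks * x) / (n * total) - (n + 1) / n)

# Hypothetical monthly consumption (arbitrary units) for four neighborhoods.
equal_usage = [120.0, 120.0, 120.0, 120.0]
concentrated_usage = [0.0, 0.0, 0.0, 480.0]

g_equal = gini(equal_usage)
g_concentrated = gini(concentrated_usage)
print(g_equal, g_concentrated)
```

With a finite sample of size n, the maximum attainable value is (n - 1) / n rather than 1, so coefficients from areas with very different numbers of neighborhoods are not directly comparable without a correction.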

Alzheimer's disease (AD) is a primary degenerative brain disease that occurs in the elderly and pre-elderly. It produces irreversible structural and molecular changes in the brain, leading to progressive cognitive and behavioral disorders. The disease is insidious in onset, which makes early diagnosis very difficult. Therefore, with the rise of deep learning, researchers have begun to use image classification and detection technology to assist in the diagnosis of Alzheimer's disease. This paper summarizes the application of image classification and detection technology to Alzheimer's disease diagnosis in three parts: methods based on a single convolutional neural network, methods that fuse attention mechanisms with convolutional neural networks, and fusion-model methods. The paper also presents a comparative analysis of the mainstream related databases, covering data scale, cohort diversity, data modality, research scenarios, and acquisition methods. This work provides a basis for researchers to select models and data and promotes the integration of imaging technology and Alzheimer's disease diagnosis.
