1. Introduction

Social media web sites such as Twitter, Facebook, and YouTube have exploded in popularity. As a result, hate speech and hate-related activities are become increasingly common. The anonymity and mobility given by online media has made the cultivation and spread of offensive langauge. Hate speech is considered as any kind of communication, whether verbal, written, or physical, that is directed towards or uses disparaging or discriminatory words in reference to a person or a group based on who they are, such as their religion, ethnicity, or country. The impacts of hate crimes are already overwhelming due to the vast usage of social media and the anonymity enjoyed by internet users [1]. Although not everyone who engages in hate speech commits violent acts, many hate crime offenders attribute their motivation to hate speech on the internet. Hate speech directed towards minorities has the potential to mainstream prejudice, hate crime, and targeted violence. Text is a tremendously rich data source. Racism is the belief that various groups of people have different behavioral features that correspond to hereditary characteristics, and that these groups may be divided based on race superiority [2]. Expressions that have the potential to inspire fury or violence are known as intoxicating language [3].

The objective of this research work is to detect the problematic statements in a mix of Dravidian language comments that are stated in Tamil-English and acquired from social media. At the comment level each sentence is tagged with two different class labels. The data set was taken from a FIRE 2021 shared task that included YouTube comments and tweets. The proposed system must distinguish between offensive and non-offensive comments or messages. The major purpose is to identify blended sentences and apply a label that detects the text's sentiment, such as offensive or not offensive.

2. Literature Survey

A brief review is provided about nearly 150 deep learning-basedmodels implemented in recent years mainly for the classification of text [4-6]. They also mentioned technical methodologies, uniqueness of each model and applications. Overview of hate speech detection techniques, challenges and future directions are discussed in [7-11]. They provided a detailed survey that specifies key areas that have been adopted to automatically recognize the content of text using natural language processing techniques. In general authors collect and label their own data. Due to the lack of unique feature identification, doing analysis in social media data is a very challenging task [12-13]. They developed Deep Neural Network models and evaluated all models using a large hate speech dataset collected from Twitter. Models like Long Short Term Memory (LSTM) and Bi- directional Long Short-Term Memory (Bi-LSTM) were implemented and found that LSTM produced better precision, F1- Score and accuracy [14-16]. For toxic comments identification, Bi-LSTM produced better recall values in [17]. Data may be represented using multiple languages can be considered as code-mixed data which come from multilingual users is more difficult to analyze.

3. Proposed System

The proposed system is used to develop an effective method for classifying the Dravidian code-mixed data set obtained from the FIRE 2021 shared work into offensive and non-offensive categories. Various pre-processing techniques are performed to clean the data set throughout the data exploration phase. LSTM, BI-LSTM, CNN, and GRU are among the deep machine learning models used to develop models. Hybrid model such as combining CNN with LSTM, are also used to increase performance even further. The proposed system workflow is represented in Figure 1.

Figure 1. Proposed System Workflow.

3.1. Dataset Collection

The data is collected from a FIRE 2021 (HASOC) challenge in Dravidian Languages [20]. The data set contains code-mixed sentences in Tamil Dravidian Language. Train Dataset contains 5877 sentences, Test dataset contains 654 sentences and validation dataset contains 654 sentences. All of them are divided into two categories: offensive and non-offensive. The dataset includes the total number of comments from each of two classes and detailed description is presented in Table 1.

Table 1. Dataset Description.

Dataset | Number of Messages | Offensive | Non Offensive |

Training | 5876 | 4724 | 1153 |

Testing | 654 | 536 | 118 |

3.2. Data Preprocessing

The data can have many irrelevant and missing parts. To handle this part, data preprocessing is done. In data preprocessing we have used data cleaning to remove the emojis and used label encoder in order to change the category from Offensive or Not offensive to 0’s and 1’s. Data preprocessing flow is depicted in Figure 2.

Figure 2. Data Preprocessing.

3.3. Data Cleaning

The process of finding sections of data that are incorrect, incomplete, inaccurate, irrelevant, or missing, and then altering, replacing, or deleting them as needed is known as data cleaning. Data cleaning is considered a crucial part of data science.

3.4. Label Encoding

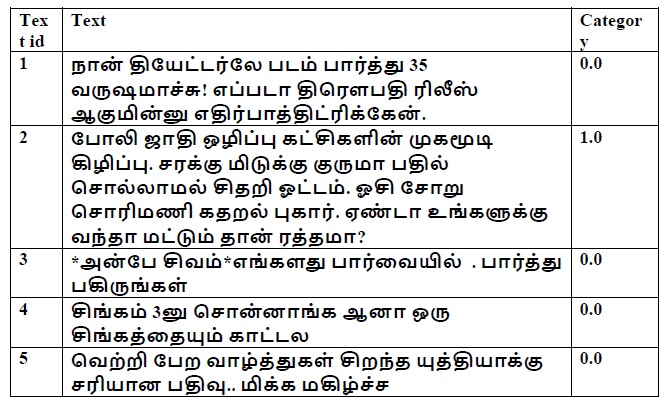

For categorical data, label encoding is a popular encoding approach. The process of converting labels into numeric representation so that machines can read them is known as label encoding. After applying label encoding technique, the resultant text and the corresponding category is represented in Figure 3.

|

Figure 3. Encoded Dataset. |

3.5. Building Deep Learning Models

For training, given the collected dataset as input with two classes OFF and NOT, the learned model has to predict the category based on various parameter tuning. LSTMs are a form of RNN that uses long short-term memory networks for storing intermediate results. The default behaviour of the LSTM is to remember information for long periods. A bidirectional LSTM differs from a standard LSTM in that its input flows in two ways. With a conventional LSTM, we may make input flow in one direction, either backwards or forwards. We may, however, have flow of information in both directions with bi- directional input, maintaining both the future and the past. Many layers of artificial neurons make up convolutional neural networks constructed from different layers of artificial neurons. These neurons are represented with mathematical functions similar to real neurons which calculate the activation value from activation function. Hybrid learning methods are a way to improve the intended outcome. In order to improve the projected result, this study work employs CNN and LSTM. Convolutional Neural Network layers are used to extract information from the input data, and LSTMs are used to forecast order. Gated Recurrent Unit features a forget gate component, the GRU is quite similar to the LSTM, but it lacks an output gate and hence has fewer parameters.

4. Performance Evaluation

Evaluation metrics considered for this project work will be Precision, Recall and F1-Score. The results of various deep learning models are tabulated below. Among these LSTM, Bi-LSTM, CNN, CNN-LSTM, GRU models, LSTM model gives the highest accuracy with recall. LSTM gives the best result with 81% accuracy with 2 epochs and various performance measures are presented in Table 2. With the sequential_4 model, the embedding layer contains 901248 parameters and 66 dense layers.

Table 2. LSTM Performance Measure.

Class Labels | Precision | Recall | F1-Score | Support |

NOT | 0.50 | 0.21 | 0.30 | 118 |

OFF | 0.85 | 0.95 | 0.90 | 536 |

Bi- LSTM gives the result with 80% accuracy with 2 epochs. With the sequential_1 model, the embedding layer contains 901248 parameters and 258 dense layers. The precision recall, F1-score values are presented in Table 3.

Table 3. Bi-LSTM Performance Measure.

Class Labels | Precision | Recall | F1-Score | Support |

NOT | 0.31 | 0.09 | 0.14 | 118 |

OFF | 0.83 | 0.96 | 0.89 | 536 |

CNN gives the result with 75% accuracy with 2 epochs. With the sequential_1 model, the embedding layer contains 6697800 parameters, 129 dense layers and 268928 ConvID. Withsequential_2 model, the embedding layer contains 901248 params,6 dense layers and 20608 ConvID.With sequential_3 model, embedding layer contains 901248 params,6 dense layers and 8224 ConvID. The precision recall, F1-score values are presented in Table 4.

Table 4. CNN Performance Measure.

Class Labels | Precision | Recall | F1-Score | Support |

NOT | 0.26 | 0.19 | 0.22 | 118 |

OFF | 0.83 | 0.88 | 0.85 | 536 |

CNN-LSTM gives the result with 79% accuracy with 50 epochs. With sequential_1 model, embedding layer contains 6697800 parameters,129 dense layers and 268928 ConvID.50 epochs have been run. The precision recall, F1-score values are presented in Table 5.

Table 5. CNN-LSTM Hybrid Model Performance Measure.

Class Labels | Precision | Recall | F1-Score | Support |

NOT | 0.36 | 0.23 | 0.28 | 118 |

OFF | 0.84 | 0.91 | 0.88 | 536 |

GRU gives the result with 81% accuracy with 1 epoch. With sequential_9 model, the embedding layer contains 901248 parameters and 258 dense layers. The precision recall, F1-score values are presented in Table 6.

Table 6. GRU Model Performance Measure.

Class Labels | Precision | Recall | F1-Score | Support |

NOT | 0.42 | 0.17 | 0.24 | 118 |

OFF | 0.84 | 0.95 | 0.89 | 536 |

5. Conclusion

The models are able to classify datasets as offensive or non-offensive for FIRE 2021 HASOC challenge in Dravidian Languages. In this research work various models like LSTM, BI-LSTM, CNN, GRU and hybrid models like CNN with LSTM were implemented. Out of all these models LSTM and GRU models achieved high accuracy of 81% to detect the offensive text with the given dataset. In future, the accuracy could be improved by employing transfer learning methodology.