1. Introduction

1.1. Regulatory Compliance Challenges in Digital Service Platforms

Digital service platforms operate across multiple jurisdictions with heterogeneous regulatory environments, which creates significant compliance complexity. These platforms handle substantial volumes of user data while offering diverse services, exposing them to numerous compliance risks under frameworks such as the Digital Services Act (DSA) [1]. Multi-product platforms face particular difficulty in monitoring and demonstrating compliance because of their distributed architectures and varied service offerings. Verifying adherence to regulatory requirements in these environments remains predominantly manual, resource-intensive, and error-prone. According to Barati et al. (2020) [2], "evaluating the compliance of cloud-hosted services is one of the most costly activities and remains a manual activity achieved through audits and reporting." The challenge grows as platforms scale, since monitoring must span content moderation, algorithmic transparency, risk management, and user data protection practices. Modern digital platforms must meet compliance requirements across jurisdictional boundaries while maintaining operational efficiency [3]. Building real-time monitoring systems that operate across heterogeneous platform environments presents substantial engineering barriers. Costa Junior (2020) [4] notes that "mobile application testing imposes several new challenges and several peculiarities," an observation that applies equally to compliance monitoring across digital service platforms [5].

1.2. Digital Services Act: Scope and Compliance Requirements

The Digital Services Act is a comprehensive regulatory framework aimed at ensuring transparency, accountability, and user protection across digital services in the European Union. The DSA establishes graduated obligations based on platform size and role, with the most stringent requirements reserved for very large online platforms [6]. Key compliance domains include content moderation, recommender system transparency, risk assessment, advertising transparency, and data access for researchers. The regulation requires platforms to establish mechanisms for handling user reports and appeals; Wang (2022) [7] reports that a well-implemented system for tracking reports and appeals yielded "annual savings of approximately $1 billion." DSA compliance also requires maintaining detailed records of platform activities, applying systematic risk management, and giving regulatory authorities access to compliance documentation. The framework emphasizes algorithmic transparency, requiring platforms to disclose information about automated decision-making processes. Platforms must implement proportionate and effective internal compliance structures to monitor adherence to DSA provisions continuously. The DSA further requires audit trails and systematic documentation of compliance efforts, which creates technical and operational challenges for implementation [8].

2. Conceptual Framework for Automated Compliance Verification

2.1. Formalization of DSA Requirements for Algorithmic Processing

The Digital Services Act contains numerous natural-language requirements that must be transformed into machine-processable specifications before they can be monitored automatically [9]. This formalization involves decomposing regulatory text into atomic requirements, classifying each requirement by compliance domain, and expressing it in a structured representation suitable for algorithmic processing [10]. A formalization must capture both explicit obligations and implicit constraints while preserving the semantics of the original regulatory text [11]. Costa Junior (2020) emphasizes that "non-functional requirements specify criteria that can be used to judge the operation of a system rather than specific behaviors," a description that fits many DSA provisions [12,13]. Representing DSA requirements formally calls for a domain-specific language that can express conditional obligations, temporal constraints, and quantitative thresholds, and that accommodates requirement types including access controls, temporal restrictions, sequence dependencies, and data protection obligations. Formalization techniques must also resolve ambiguities in regulatory language through explicit semantic mappings between natural-language terms and their formal counterparts. Segura et al. (2017) discuss "the hypothesis of applying metamorphic testing as an effective and practical approach to addressing non-compliance defects in NFRs," which provides a foundation for formalizing regulatory requirements for automated verification [14].
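To make the idea of a formal requirement representation concrete, the following is a minimal sketch, not the framework's actual DSL. It assumes a hypothetical `AtomicRequirement` schema in which a conditional obligation is a predicate over an event, a temporal constraint is an optional deadline, and a quantitative threshold is a metric name with a lower bound. The identifiers `CM-01` and `TR-01`, the 24-hour deadline, and the 0.8 threshold are illustrative placeholders, not values taken from the DSA.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Optional


@dataclass(frozen=True)
class AtomicRequirement:
    """One atomic obligation decomposed from regulatory text (hypothetical schema)."""
    req_id: str                           # illustrative ID, not an official DSA article number
    domain: str                           # compliance domain used for classification
    applies_if: Callable[[dict], bool]    # conditional obligation: when is it triggered?
    deadline: Optional[timedelta] = None  # temporal constraint (None = no deadline)
    metric: Optional[str] = None          # quantitative threshold: metric name...
    min_value: Optional[float] = None     # ...and its lower bound

    def check(self, event: dict) -> Optional[bool]:
        """Return None if the requirement does not apply, else True/False."""
        if not self.applies_if(event):
            return None
        if self.deadline is not None:
            if event["acted_at"] - event["received_at"] > self.deadline:
                return False
        if self.metric is not None and self.min_value is not None:
            # A missing metric is treated as a failed threshold.
            if event.get(self.metric, float("-inf")) < self.min_value:
                return False
        return True


# Hypothetical requirements; the deadline and threshold are placeholders.
timely_action = AtomicRequirement(
    req_id="CM-01",
    domain="content_moderation",
    applies_if=lambda e: e["type"] == "removal",
    deadline=timedelta(hours=24),
)
explanation_rule = AtomicRequirement(
    req_id="TR-01",
    domain="transparency",
    applies_if=lambda e: e["type"] == "recommendation",
    metric="explanation_completeness",
    min_value=0.8,
)

t0 = datetime(2024, 1, 1, 12, 0)
print(timely_action.check({"type": "removal", "received_at": t0,
                           "acted_at": t0 + timedelta(hours=3)}))  # True
print(explanation_rule.check({"type": "removal"}))  # None: not applicable
```

Returning `None` for non-applicable requirements keeps "not triggered" distinct from "compliant", which matters when computing coverage statistics over many events.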

2.2. Metamorphic Testing Principles for Regulatory Compliance

Metamorphic testing offers a systematic approach to compliance verification: instead of relying on precise test oracles, it checks relationships that must hold between the inputs and outputs of related executions of a digital service operation. This is valuable for compliance verification, where the exact expected output is often undefined but the relationship between execution scenarios can be specified. Applying metamorphic testing to regulatory compliance involves defining metamorphic relations that encode compliance constraints as properties that must hold across platform states and operations, and then using these relations to generate follow-up test cases. Costa Junior (2020) notes that "metamorphic testing is an approach that has been applied in many domains as a strategy for generating new test cases and an alternative to alleviate the oracle problem" [15]. In compliance verification, the oracle problem arises because regulations rarely specify the exact expected behavior. Metamorphic relations can be established for content moderation, algorithmic transparency, risk management, and user data protection. Defining them requires domain expertise to translate regulatory requirements into verifiable properties that capture the intent of compliance obligations, and the effectiveness of the approach depends on how comprehensively the relations cover DSA requirements.
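As an illustration of metamorphic relations without an oracle, the sketch below tests a hypothetical report-triage function. It never asks what the correct priority is; it only checks two assumed relations: (MR1) reordering the same reports must not change any item's outcome, and (MR2) one additional report about an item must not lower its priority. The functions `triage` and `buggy_triage` are stand-ins for a system under test, not components described in the paper.

```python
import random
from typing import Callable, Dict, List

Report = Dict[str, str]  # hypothetical: {"item": ..., "reason": ...}
Triage = Callable[[List[Report]], Dict[str, int]]


def triage(reports: List[Report]) -> Dict[str, int]:
    """Stand-in system under test: priority = number of reports per item."""
    priority: Dict[str, int] = {}
    for r in reports:
        priority[r["item"]] = priority.get(r["item"], 0) + 1
    return priority


def buggy_triage(reports: List[Report]) -> Dict[str, int]:
    """Faulty variant: escalates only the item named in the first report."""
    return {reports[0]["item"]: len(reports)} if reports else {}


def mr_permutation_invariance(sut: Triage, reports: List[Report],
                              trials: int = 50, seed: int = 0) -> bool:
    """MR1: reordering the same reports must not change any item's outcome."""
    rng = random.Random(seed)
    baseline = sut(reports)
    for _ in range(trials):
        follow_up = list(reports)  # follow-up input derived from the source input
        rng.shuffle(follow_up)
        if sut(follow_up) != baseline:
            return False
    return True


def mr_monotonic_escalation(sut: Triage, reports: List[Report], extra: Report) -> bool:
    """MR2: one additional report about an item must not lower its priority."""
    before = sut(reports).get(extra["item"], 0)
    after = sut(reports + [extra]).get(extra["item"], 0)
    return after >= before


reports = [{"item": "a", "reason": "spam"}, {"item": "b", "reason": "hate"},
           {"item": "c", "reason": "fraud"}, {"item": "d", "reason": "spam"},
           {"item": "a", "reason": "spam"}]
print(mr_permutation_invariance(triage, reports))        # True
print(mr_permutation_invariance(buggy_triage, reports))  # False: result depends on order
```

Neither relation states the "right" priority for any item, which is exactly why such relations remain usable when the regulation leaves the expected output unspecified.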

3. Machine Learning Architecture for Multi-Product Monitoring

3.1. Feature Engineering and Data Extraction for Compliance Indicators

Machine learning approaches to DSA compliance monitoring require robust feature engineering to transform platform activity into structured representations suitable for automated analysis. Extracting compliance-relevant features involves processing heterogeneous data sources, including platform logs, user activity records, content moderation decisions, and algorithmic performance metrics. Features must capture both explicit compliance indicators, such as response times, and implicit indicators, such as content classification accuracy. Gupta et al. (2021) developed BISRAC, in which the risk priority number (RPN) "is calculated as product of three base metrics: Severity, Occurrence, Detection against each attack," demonstrating how engineered features enable risk quantification. Table 1 presents the primary compliance indicator categories derived from DSA requirements, mapping regulatory domains to measurable features.
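The RPN computation quoted above can be sketched in a few lines. This assumes FMEA-style integer scales from 1 to 10 for each base metric, which the quotation does not state, and the compliance failure modes and their scores below are hypothetical examples rather than results from BISRAC or this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """A compliance failure mode scored on assumed 1-10 scales, as in classic FMEA."""
    name: str
    severity: int    # impact if the failure occurs
    occurrence: int  # how likely it is to occur
    detection: int   # how hard it is to detect (10 = hardest)

    def __post_init__(self) -> None:
        for score in (self.severity, self.occurrence, self.detection):
            if not 1 <= score <= 10:
                raise ValueError("scores must lie in 1..10")

    @property
    def rpn(self) -> int:
        """Risk priority number: product of the three base metrics."""
        return self.severity * self.occurrence * self.detection


# Hypothetical failure modes and scores, for illustration only.
modes = [
    FailureMode("missed notice-handling deadline", severity=7, occurrence=4, detection=3),
    FailureMode("incomplete recommender explanation", severity=5, occurrence=6, detection=5),
    FailureMode("unlabelled advertisement", severity=6, occurrence=2, detection=4),
]
for m in sorted(modes, key=lambda m: m.rpn, reverse=True):
    print(f"{m.rpn:4d}  {m.name}")
```

Because the score is a product, a failure mode that is moderately severe but frequent and hard to detect (RPN 150 here) can outrank a more severe but rarer one (RPN 84), which is the prioritization behavior the multiplicative form is meant to produce.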

Table 1: DSA Compliance Indicator Categories and Corresponding Features

| Compliance Domain | Feature Category | Feature Examples | Data Sources |
|---|---|---|---|
| Content Moderation | Response Metrics | Time-to-action, Decision consistency | Moderation logs |
| Transparency | Disclosure Metrics | Recommendation explanation completeness | API responses |
| Risk Management | Risk Indicators | Detected risk patterns, Mitigation effectiveness | Risk assessment reports |
| User Protection | Protection Metrics | Ad transparency scores, Data access controls | User interface audit logs |

The feature extraction process must address significant challenges including data quality variations across platforms, missing values in compliance records, and inconsistent data representations. Table 2 outlines the feature extraction methods applied to different data types encountered in multi-product environments.

Table 2: Feature Extraction Methods for Different Data Types

| Data Type | Extraction Method | Preprocessing Requirements | Normalization Approach |
|---|---|---|---|
| Temporal Data | Time series extraction | Temporal alignment, Gap filling | Min-max scaling |
| Textual Content | NLP-based feature extraction | Tokenization, Entity recognition | TF-IDF vectorization |
| Numerical Metrics | Statistical aggregation | Outlier detection, Imputation | Z-score normalization |
| Categorical Data | One-hot encoding | Category standardization | Frequency encoding |
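Several of the preprocessing and normalization steps listed in Table 2 are simple enough to show directly. The sketch below uses only the Python standard library and covers forward-fill gap filling for temporal data, min-max scaling, z-score normalization, and frequency encoding. TF-IDF and one-hot encoding are omitted for brevity. Forward fill is one assumed choice of gap-filling strategy; the table does not specify which strategy is used.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import List, Optional, Sequence


def forward_fill(series: Sequence[Optional[float]]) -> List[Optional[float]]:
    """Gap filling for temporal data: carry the last observation forward."""
    filled, last = [], None
    for v in series:
        last = v if v is not None else last
        filled.append(last)  # leading gaps stay None: there is nothing to carry yet
    return filled


def min_max(values: Sequence[float]) -> List[float]:
    """Scale to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    return [0.0] * len(values) if hi == lo else [(v - lo) / (hi - lo) for v in values]


def z_score(values: Sequence[float]) -> List[float]:
    """Center on the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [0.0] * len(values) if sigma == 0 else [(v - mu) / sigma for v in values]


def frequency_encode(categories: Sequence[str]) -> List[float]:
    """Replace each category by its relative frequency in the sample."""
    counts, n = Counter(categories), len(categories)
    return [counts[c] / n for c in categories]


print(forward_fill([None, 4.0, None, 6.0]))                # [None, 4.0, 4.0, 6.0]
print(min_max([10, 20, 30]))                               # [0.0, 0.5, 1.0]
print(frequency_encode(["ads", "ads", "feed", "search"]))  # [0.5, 0.5, 0.25, 0.25]
```

In a streaming setting, the statistics used by `min_max` and `z_score` would typically be fitted on a reference window and reused, rather than recomputed per batch, so that features stay comparable over time.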

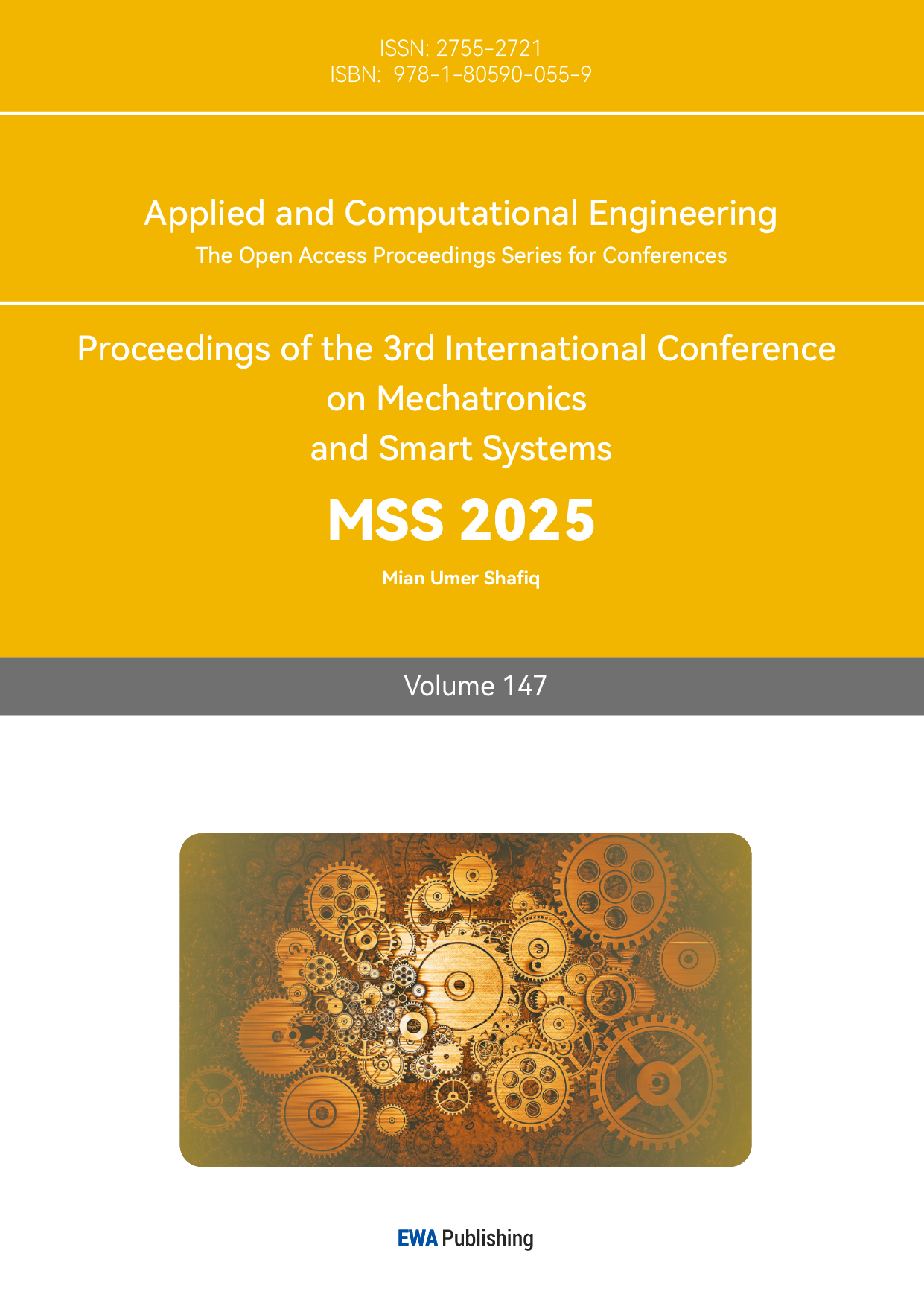

The implementation of feature engineering pipelines requires balancing computational efficiency with feature expressiveness to enable real-time monitoring capabilities. Figure 1 illustrates the comprehensive machine learning pipeline for feature extraction and processing in DSA compliance monitoring.

Figure 1: Machine Learning Pipeline for DSA Compliance Feature Engineering

The figure depicts a multi-stage processing pipeline with data collection modules on the left that gather inputs from various platform services (content moderation, user-facing APIs, recommendation systems, advertising systems). The central processing stages include data cleaning, feature extraction (with parallel paths for different data types), feature transformation, and selection modules. The right side shows the final feature vectors organized by compliance domain with temporal metadata attachments. The architecture implements feedback loops from monitoring outcomes back to feature selection to optimize relevance. Different compliance domains are represented in color-coded processing paths with data flow indicators showing cross-domain feature relationships.

3.2. Hybrid Risk Assessment Model for Digital Services Act Compliance

The compliance risk assessment model combines supervised and unsupervised learning to classify platform activities by compliance status and risk level. Supervised components use labeled compliance cases to train classifiers that identify potential violations, while unsupervised components detect anomalous patterns that may indicate compliance risks without requiring prior examples. Gupta et al. (2021) extend the RPN with impact factors, $\mathrm{BRPN} = \mathrm{RPN} \times I_{\text{cust}} \times I_{\text{int}} \times I_{\text{avail}} \times I_{\text{conf}}$, where the four factors are the customer, integrity, availability, and confidentiality impacts, showing how multiple factors contribute to a comprehensive risk assessment. The hybrid approach addresses the scarcity of labeled training data through transfer learning from related compliance domains and synthetic data generation. Table 3 presents the risk assessment metrics and their relative weights in the overall risk score computation.
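The supervised/unsupervised combination can be illustrated with a deliberately small sketch: a logistic score stands in for a trained classifier, and an absolute z-score against recent history stands in for the anomaly detector. An item is flagged when either signal exceeds its threshold. The feature names, weights, bias, history values, and the 0.5 and 3.0 thresholds are all hypothetical, and the real model (Figure 2) is considerably richer than this.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List


def supervised_score(features: Dict[str, float], weights: Dict[str, float], bias: float) -> float:
    """Logistic model; weights would come from training on labeled compliance cases."""
    z = bias + sum(weights.get(name, 0.0) * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def anomaly_score(value: float, history: List[float]) -> float:
    """Unsupervised signal: absolute z-score of a new observation against its history."""
    sigma = pstdev(history)
    return 0.0 if sigma == 0 else abs(value - mean(history)) / sigma


def hybrid_assessment(features: Dict[str, float], weights: Dict[str, float], bias: float,
                      value: float, history: List[float],
                      p_threshold: float = 0.5, z_threshold: float = 3.0) -> dict:
    """Flag if either the classifier or the anomaly detector raises an alarm."""
    p = supervised_score(features, weights, bias)
    a = anomaly_score(value, history)
    return {"violation_prob": p, "anomaly": a,
            "flag": p >= p_threshold or a >= z_threshold}


# Hypothetical weights and a hypothetical daily count of overdue notices.
weights, bias = {"late_action_share": 4.0, "appeal_reversal_rate": 2.5}, -3.0
history = [100.0, 102.0, 98.0, 101.0, 99.0]
print(hybrid_assessment({"late_action_share": 0.1, "appeal_reversal_rate": 0.2},
                        weights, bias, value=110.0, history=history))
```

In this example the classifier alone would not flag the case (probability about 0.11), but the overdue-notice count is far outside its recent range, so the unsupervised branch catches a pattern for which no labeled examples exist.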

Table 3: Risk Assessment Metrics and Their Weights in Compliance Risk Scoring

| Risk Category | Assessment Metric | Weight (%) | Detection Method | Confidence Threshold |
|---|---|---|---|---|
| Procedural Compliance | Process adherence score | 25 | Rule-based classification | 0.85 |
| Temporal Compliance | Deadline adherence rate | 20 | Temporal logic verification | 0.90 |
| Content Compliance | Content policy alignment | 30 | Neural text classification | 0.75 |
| Transparency Compliance | Explanation completeness | 15 | Semantic similarity scoring | 0.80 |
| User Protection | Data handling compliance | 10 | Pattern detection | 0.90 |
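A minimal sketch of how the Table 3 weights could be combined into one score is shown below. The weights and thresholds come from the table, but how the confidence threshold is applied is an assumption made for illustration: a category whose detector confidence falls below its threshold is excluded from the weighted sum and queued for manual review, and the remaining weights are renormalized. The risk and confidence inputs in the example are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# (weight, confidence threshold) per risk category, taken from Table 3.
TABLE3: Dict[str, Tuple[float, float]] = {
    "procedural": (0.25, 0.85),
    "temporal": (0.20, 0.90),
    "content": (0.30, 0.75),
    "transparency": (0.15, 0.80),
    "user_protection": (0.10, 0.90),
}


def overall_risk(assessments: Dict[str, Tuple[float, float]]) -> Tuple[Optional[float], List[str]]:
    """assessments maps category -> (risk in [0, 1], detector confidence in [0, 1]).

    Assumption: low-confidence categories are routed to manual review and
    excluded; remaining weights are renormalized so the score stays in [0, 1].
    """
    weighted, total_weight, review = 0.0, 0.0, []
    for category, (weight, threshold) in TABLE3.items():
        risk, confidence = assessments[category]
        if confidence < threshold:
            review.append(category)
            continue
        weighted += weight * risk
        total_weight += weight
    return (weighted / total_weight if total_weight else None), review


example = {"procedural": (0.2, 0.95), "temporal": (0.1, 0.95), "content": (0.6, 0.70),
           "transparency": (0.4, 0.90), "user_protection": (0.0, 0.99)}
score, review = overall_risk(example)
print(round(score, 3), review)  # 0.186 ['content']
```

Renormalizing keeps the score on the same scale whether or not every category is confident, at the cost of hiding the excluded category's contribution, which is why it is reported separately for review.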

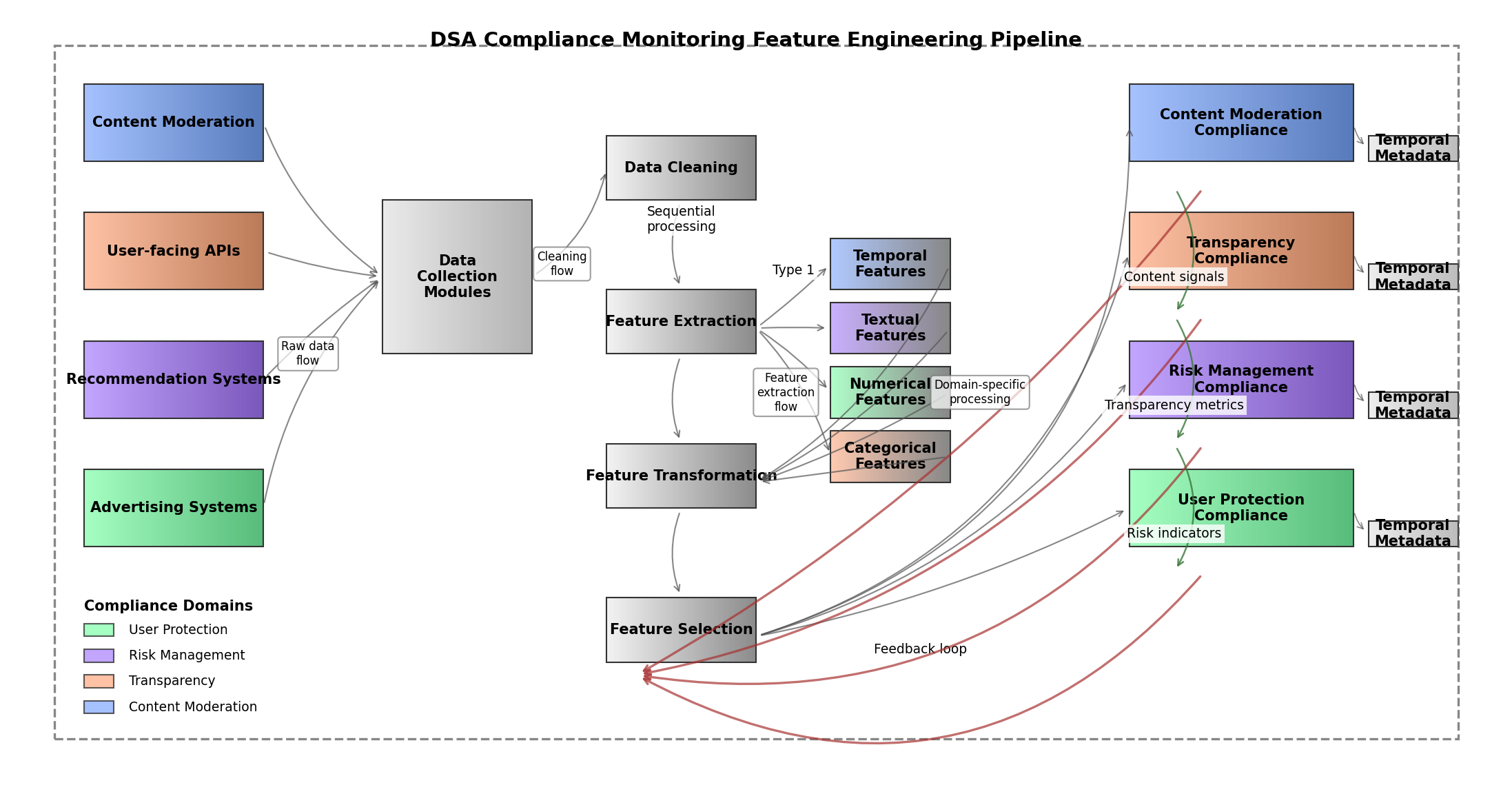

The risk assessment model architecture incorporates multiple specialized models, each focused on specific compliance domains with domain adaptation techniques to address platform-specific variations. Barati et al. (2020) utilized "timed automata in Uppaal" for verification, which informs our temporal risk assessment components. Figure 2 illustrates the neural network architecture for the hybrid risk assessment model.
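The "Deadline adherence rate" metric from Table 3 can be computed in the spirit of a timed-automaton clock constraint: each notice starts a clock at receipt, and the obligation is met only if action occurs while the clock is still within the deadline. The sketch below is not an Uppaal model; it is a plain Python evaluation of that constraint over a log. The 24-hour deadline and the notice log are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

Notice = Tuple[datetime, Optional[datetime]]  # (received_at, acted_at or None if still open)


def deadline_adherence(notices: List[Notice], deadline: timedelta,
                       now: datetime) -> Optional[float]:
    """Share of decidable notices handled within `deadline`.

    Acting after the deadline, or still being open once it has expired, counts
    as a miss. Notices still inside their window are pending and excluded.
    Returns None when nothing is decidable yet.
    """
    met = missed = 0
    for received, acted in notices:
        due = received + deadline
        if acted is not None:
            if acted <= due:
                met += 1
            else:
                missed += 1
        elif now > due:
            missed += 1
    decided = met + missed
    return met / decided if decided else None


t0 = datetime(2024, 5, 1, 9, 0)
log = [(t0, t0 + timedelta(hours=2)),      # handled in time
       (t0, t0 + timedelta(hours=30)),     # handled late
       (t0, None),                         # still open after the deadline
       (t0 + timedelta(hours=40), None)]   # still inside its window: pending
print(deadline_adherence(log, timedelta(hours=24), now=t0 + timedelta(hours=48)))  # 0.3333333333333333
```

Excluding pending notices avoids penalizing a platform for obligations whose deadline has not yet passed, while still counting open notices as misses once their clock has expired.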

Figure 2: Neural Network Architecture for DSA Compliance Risk Assessment

The figure illustrates a complex neural architecture with multiple interconnected components. The bottom layer shows input features organized by compliance domain, feeding into specialized feature processing modules. The middle layers implement domain-specific neural networks (CNNs for content analysis, RNNs for temporal sequences, transformers for textual content) that process features independently. The architecture includes cross-domain attention mechanisms represented by dotted connections between domain-specific networks. The upper layers show progressive feature fusion through self-attention mechanisms culminating in risk assessment outputs. Skip connections indicate how domain expertise is incorporated through regularization pathways, while uncertainty quantification modules appear as parallel assessment streams providing confidence scores alongside risk predictions.

3.3. Real-time Monitoring System Design for Multi-Product Environments

The real-time monitoring system design addresses the technical challenges of continuous compliance verification across heterogeneous product environments. The architecture implements distributed monitoring components deployed across platform services, centralized analysis engines, and visualization interfaces for compliance reporting. The system design balances computational efficiency with monitoring comprehensiveness through adaptive sampling techniques that adjust monitoring intensity based on risk assessments. Huang (2024) noted that "software testing on mobile apps refers to different types of testing methods to be applied to different types of applications (native, hybrid, and web)," which similarly applies to monitoring diverse digital services. The monitoring system implements incremental verification techniques that optimize resource utilization by focusing on changed components rather than full system verification at each cycle. Table 4 presents system performance metrics across different platform types and operational conditions.
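The two efficiency techniques named above, risk-driven adaptive sampling and incremental verification of changed components, can each be sketched in a few lines. The assumptions are as follows. The sampling rate is interpolated linearly between a hypothetical floor and ceiling. Events are sampled deterministically by hashing their IDs, so the same event always receives the same decision. A component is re-verified only when a hash of its monitored configuration differs from the previous cycle. The component names and configurations are illustrative only.

```python
import hashlib
import json
from typing import Dict, List


def sampling_rate(risk: float, floor: float = 0.01, ceiling: float = 1.0) -> float:
    """Share of events to inspect, interpolated linearly from a risk score in [0, 1]."""
    risk = min(max(risk, 0.0), 1.0)
    return floor + (ceiling - floor) * risk


def should_inspect(event_id: str, rate: float) -> bool:
    """Deterministic sampling: map the event ID's hash to [0, 1) and compare with the rate."""
    digest = hashlib.sha256(event_id.encode()).hexdigest()
    return int(digest[:13], 16) / 16**13 < rate  # 52 bits, so the value is always < 1


def fingerprint(config: dict) -> str:
    """Stable hash of a component's monitored configuration."""
    return hashlib.sha256(json.dumps(config, sort_keys=True).encode()).hexdigest()


def components_to_reverify(current: Dict[str, dict], last_seen: Dict[str, str]) -> List[str]:
    """Incremental verification: only components whose fingerprint changed (or are new)."""
    return sorted(name for name, cfg in current.items()
                  if last_seen.get(name) != fingerprint(cfg))


configs = {"ranker": {"model": "v1"}, "ads": {"labels": True}}
last_seen = {name: fingerprint(cfg) for name, cfg in configs.items()}
configs["ranker"]["model"] = "v2"         # a deployment changed the ranker
configs["search"] = {"index": "2024-06"}  # a newly monitored component
print(components_to_reverify(configs, last_seen))  # ['ranker', 'search']

rate = sampling_rate(0.8)
print(sum(should_inspect(f"evt-{i}", rate) for i in range(1000)), "of 1000 events sampled")
```

Hash-based sampling is reproducible across replicas and audits, which a call to a random number generator per event would not be.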

Table 4: Monitoring System Performance Metrics Across Platform Types

| Platform Type | Processing Latency (ms) | Throughput (events/sec) | Detection Accuracy (%) | False Positive Rate (%) | Resource Utilization (%) |
|---|---|---|---|---|---|
| Content Platforms | 145 | 15,000 | 93.2 | 2.8 | 35 |
| E-commerce Services | 210 | 8,500 | 95.6 | 1.9 | 42 |
| Communication Tools | 95 | 22,000 | 91.8 | 3.5 | 28 |
| Cloud Infrastructure | 180 | 12,000 | 94.3 | 2.2 | 38 |
| Integrated Platforms | 230 | 7,500 | 96.7 | 1.5 | 45 |
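One way to read the latency and throughput columns together is Little's law, $L = \lambda W$, which gives the average number of events in flight for a stable system. The sketch below applies it to the Table 4 values. It assumes that "Processing Latency" is the mean end-to-end time an event spends in the pipeline and that the system is in steady state; neither assumption is stated in the table. The result is a rough sizing figure for buffers and worker concurrency, not a measured quantity.

```python
# (processing latency in ms, throughput in events/sec) from Table 4.
TABLE4 = {
    "Content Platforms": (145, 15_000),
    "E-commerce Services": (210, 8_500),
    "Communication Tools": (95, 22_000),
    "Cloud Infrastructure": (180, 12_000),
    "Integrated Platforms": (230, 7_500),
}


def in_flight_events(latency_ms: float, throughput_eps: float) -> float:
    """Little's law L = lambda * W, with W converted from milliseconds to seconds."""
    return throughput_eps * latency_ms / 1000.0


for platform, (latency, throughput) in TABLE4.items():
    print(f"{platform:22s} ~{in_flight_events(latency, throughput):,.0f} events in flight")
```

Under these assumptions, every platform type holds roughly 1,700 to 2,200 events in the pipeline at any moment, so the lower-latency communication tools achieve their higher throughput without a larger in-flight backlog.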

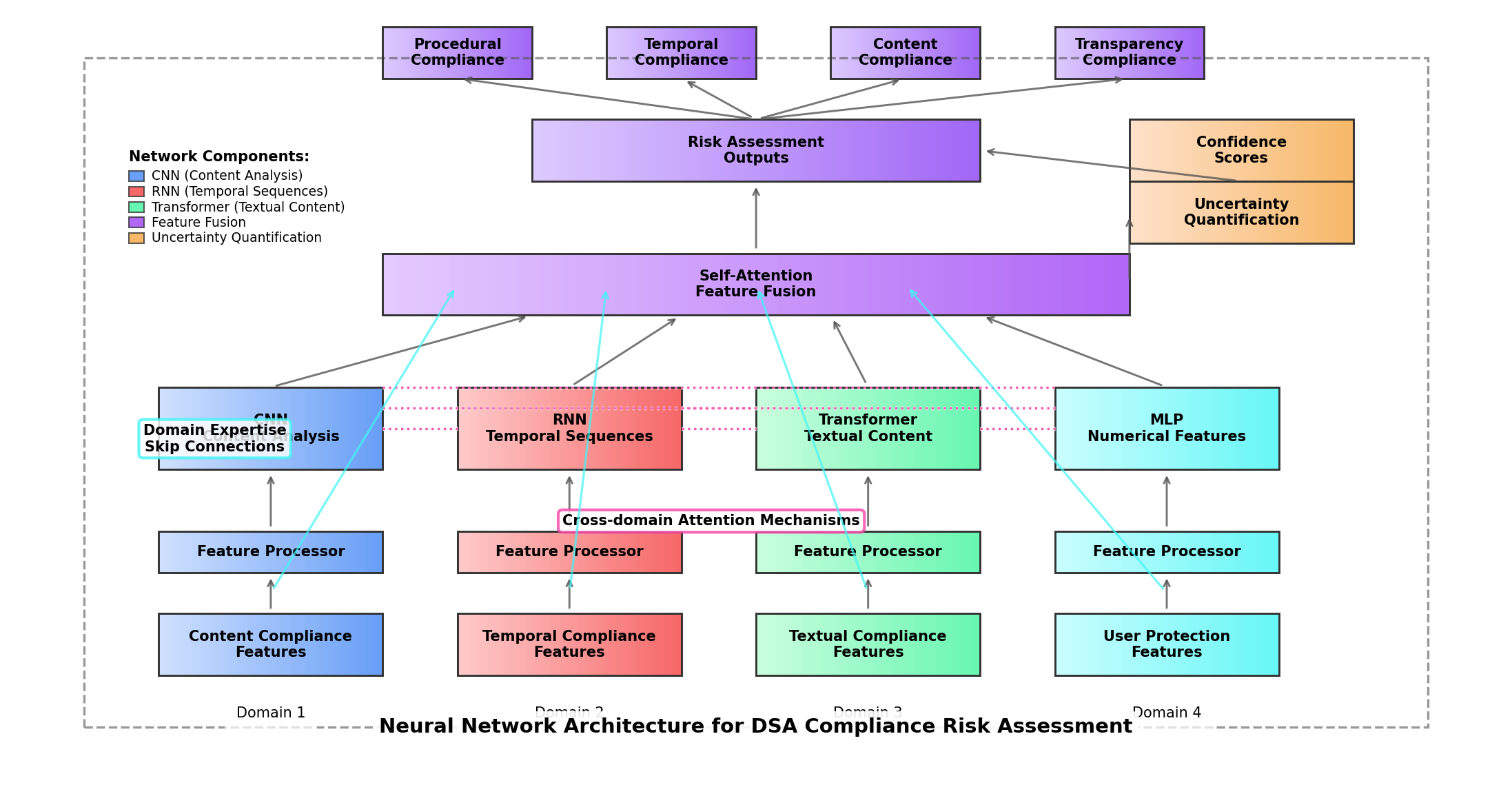

The system architecture includes specialized components for different compliance domains, optimization techniques for real-time performance, and integration interfaces for platform-specific adaptations. Xu et al. (2024) proposed "automated compliance verification of fund activities," which informs our monitoring approach for digital services. Figure 3 provides a comprehensive view of the system architecture for real-time compliance monitoring.

Figure 3: System Architecture for Real-time Compliance Monitoring in Multi-Product Environments

The diagram presents a multi-layered architecture with platform-specific data collectors at the bottom layer interfacing with various digital services through standardized APIs. The middle layers contain data processing modules (stream processors, batch analyzers, data transformation services) feeding into a central monitoring engine. The monitoring engine implements parallel compliance verification processes for different DSA requirements, with temporal verification components highlighted. The architecture features horizontal scaling capabilities for high-throughput environments and vertical specialization for complex compliance domains. The top layer shows management interfaces, alerting systems, and regulatory reporting modules with bidirectional information flows. Cross-cutting concerns like security, data protection, and system health monitoring appear as vertical components spanning all layers with dedicated resources for performance optimization.

4. Implementation and Evaluation Strategy

4.1. Multi-Platform Data Integration and Processing Pipeline

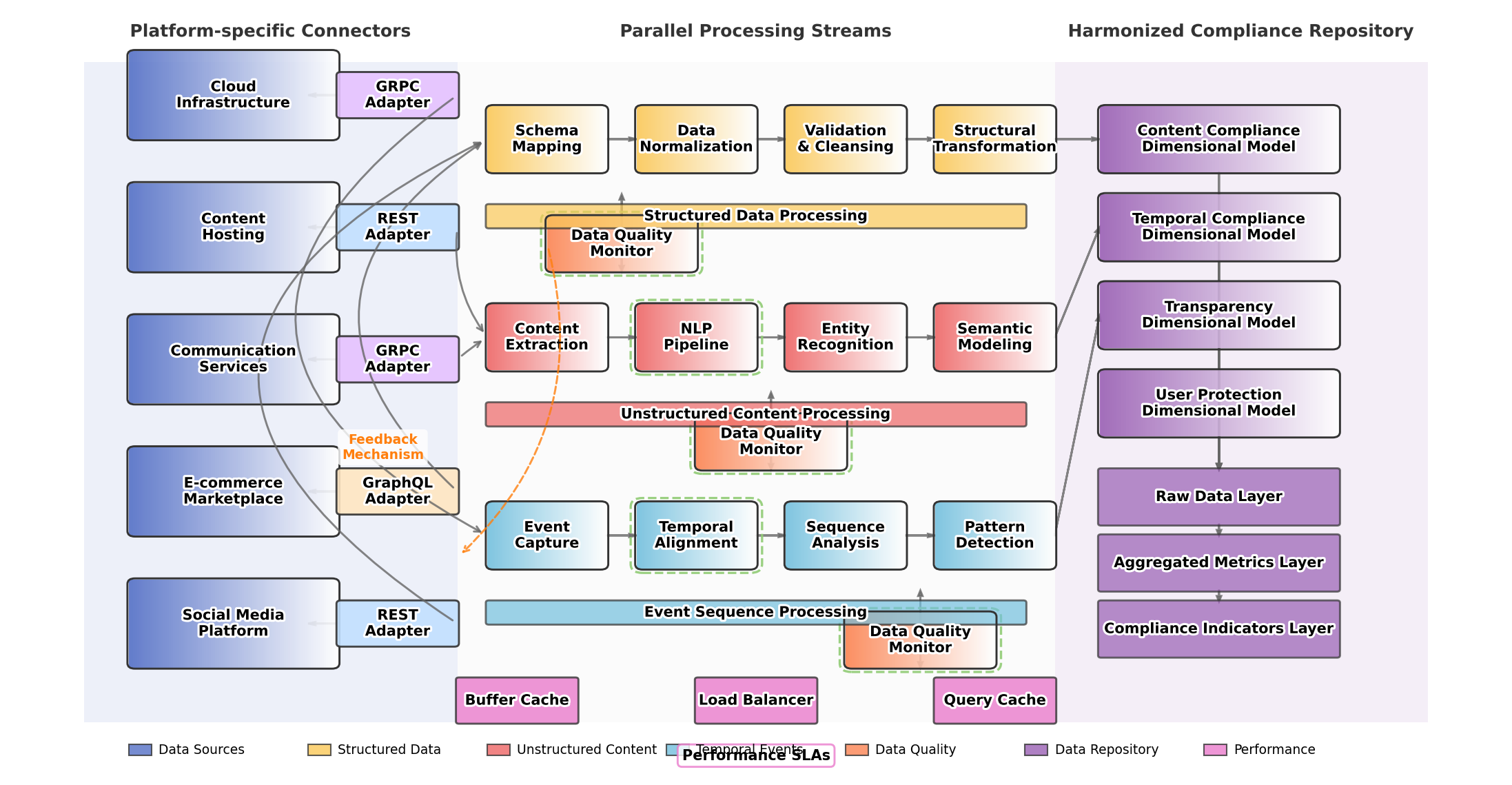

The implementation of DSA compliance monitoring systems requires robust data integration mechanisms capable of ingesting and processing heterogeneous data from multiple digital service platforms. The data integration architecture must address variations in data formats, schema structures, and access patterns across diverse platform environments. Wang (2024) reports having "developed a system enabling Google users to track the status of their reports and appeals," demonstrating effective data collection across complex systems. The integration pipeline consists of specialized connectors for platform-specific APIs, transformation modules for data normalization, and staging repositories for temporary storage during processing. The data processing pipeline implements parallel processing streams optimized for different data types, with specialized modules for structured, semi-structured, and unstructured content. Ni (2024) emphasized that "mobile applications have some additional requirements that are less commonly encountered in traditional software applications," which similarly applies to data processing requirements for diverse digital platforms. The processing pipeline includes data quality assessment modules that evaluate the completeness, accuracy, and timeliness of compliance-related information. Figure 4 illustrates the comprehensive data integration and processing architecture implemented for DSA compliance monitoring.
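Two of the three data quality dimensions mentioned above can be computed from normalized records alone. The sketch below assumes a hypothetical minimal schema (`REQUIRED`). It measures completeness as the share of required fields that are present and non-null, and timeliness as the share of timestamped records ingested within a maximum lag. Accuracy is omitted because it requires a trusted reference source to compare against.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Sequence

# Hypothetical minimal schema for a normalized compliance event.
REQUIRED = ("record_id", "platform", "event_type", "occurred_at", "ingested_at")


def completeness(records: List[Dict], required: Sequence[str] = REQUIRED) -> float:
    """Share of required fields that are present and non-null across all records."""
    if not records:
        return 0.0
    filled = sum(1 for r in records for f in required if r.get(f) is not None)
    return filled / (len(records) * len(required))


def timeliness(records: List[Dict], max_lag: timedelta) -> float:
    """Share of timestamped records ingested within `max_lag` of the event."""
    timed = [r for r in records
             if r.get("occurred_at") is not None and r.get("ingested_at") is not None]
    if not timed:
        return 0.0
    on_time = sum(1 for r in timed if r["ingested_at"] - r["occurred_at"] <= max_lag)
    return on_time / len(timed)


t = datetime(2024, 6, 1, 8, 0)
batch = [
    {"record_id": "1", "platform": "video", "event_type": "removal",
     "occurred_at": t, "ingested_at": t + timedelta(minutes=2)},
    {"record_id": "2", "platform": "shop", "event_type": None,
     "occurred_at": t, "ingested_at": None},
]
print(completeness(batch), timeliness(batch, timedelta(minutes=5)))  # 0.8 1.0
```

Records lacking timestamps are excluded from timeliness but still lower completeness, so the two scores should be read together rather than in isolation.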

Figure 4: Multi-Platform Data Integration and Processing Architecture

The figure depicts a complex data pipeline architecture with multiple interconnected components spanning from data source systems to compliance analysis outputs. The left side shows platform-specific connectors with protocol adapters (REST, GraphQL, gRPC) connecting to various digital services. The middle section illustrates parallel processing streams with specialized paths for different data types (structured data processed through normalization and validation; unstructured content through NLP pipelines; event sequences through temporal processing). The architecture includes data quality monitoring modules intersecting each processing path with feedback mechanisms to source systems. The right side shows the harmonized compliance data repository with dimensional models organized by compliance domains and hierarchical aggregation layers. Performance optimization components appear as cross-cutting concerns with buffers, caches, and load balancing mechanisms deployed throughout the pipeline to maintain processing SLAs.

4.2. Performance Metrics and Validation Methodology

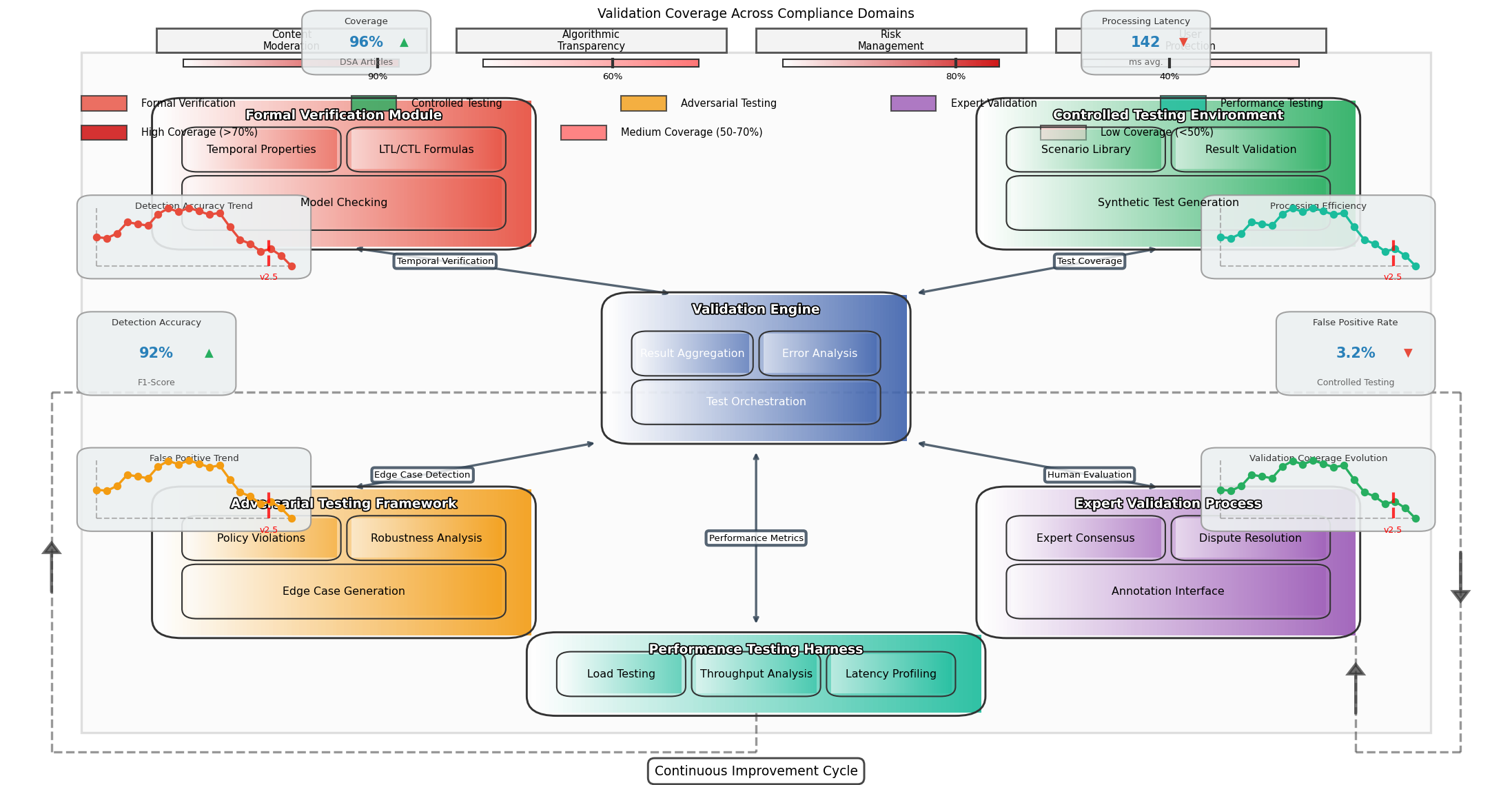

The evaluation of automated compliance monitoring systems requires comprehensive performance metrics and validation methodologies that assess both technical capabilities and compliance effectiveness. The evaluation framework combines computational performance metrics, such as processing latency and throughput, with compliance-specific metrics, including detection accuracy and coverage. Rao et al. (2024) developed specific "temporal logic formulas" for verification, which serve as inspiration for our validation methodology. The validation methodology incorporates multiple testing approaches, including controlled experiments with synthetic data, comparative analysis against manual assessments, and blind testing by compliance experts. Ma et al. (2024) noted that "standards emphasize organizational aspects and have limited product orientation," which informs our approach to validation against formal requirements. The validation methodology implements a continuous validation pipeline that automatically executes test suites against system updates, ensuring sustained compliance effectiveness. Figure 5 illustrates the validation workflow implemented for the compliance monitoring system.
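The comparative analysis against manual assessments reduces to comparing two label sequences over the same cases. A minimal sketch follows. It computes precision, recall, false positive rate, and Cohen's kappa, which corrects raw agreement for the agreement expected by chance given each rater's marginal rates, between automated violation flags and expert judgments. The label vectors are hypothetical and do not reproduce any result in this paper.

```python
from typing import Dict, Sequence


def agreement(auto: Sequence[bool], expert: Sequence[bool]) -> Dict[str, float]:
    """Compare automated violation flags with expert judgments on the same cases."""
    if len(auto) != len(expert) or not auto:
        raise ValueError("need two non-empty label sequences of equal length")
    pairs = list(zip(auto, expert))
    n = len(pairs)
    tp = sum(1 for a, e in pairs if a and e)
    fp = sum(1 for a, e in pairs if a and not e)
    fn = sum(1 for a, e in pairs if not a and e)
    tn = n - tp - fp - fn
    observed = (tp + tn) / n
    # Chance agreement from each rater's marginal positive/negative rates.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        "cohen_kappa": (observed - expected) / (1 - expected) if expected < 1 else 1.0,
    }


auto = [True, True, False, False, True, False, False, False, True, False]
expert = [True, False, False, False, True, False, True, False, True, False]
print(agreement(auto, expert))
```

Here the raw agreement is 80%, but kappa is only about 0.58 because roughly half of that agreement would be expected by chance from the label frequencies alone, which is why kappa is the more informative figure for blind expert comparisons.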

Figure 5: Validation Methodology for DSA Compliance Monitoring

The figure presents a comprehensive validation framework with multiple testing phases represented as interconnected workflows. The central validation engine orchestrates multiple specialized validation components including: formal verification modules (applying model checking to temporal properties), controlled testing environments (with synthetically generated compliance scenarios), adversarial testing frameworks (systematically exploring edge cases), expert validation processes (with configurable annotation interfaces), and performance testing harnesses (measuring system behavior under various load profiles). The diagram employs color gradients to indicate validation coverage levels across different compliance domains, with darker shades representing higher validation intensity. Bidirectional arrows show how validation results feed back into system optimization, creating a continuous improvement cycle. Performance metrics appear as dashboard elements surrounding the main workflow, with time-series visualizations tracking validation effectiveness over multiple system versions.

4.3. Case Studies: Compliance Monitoring Across Digital Service Categories

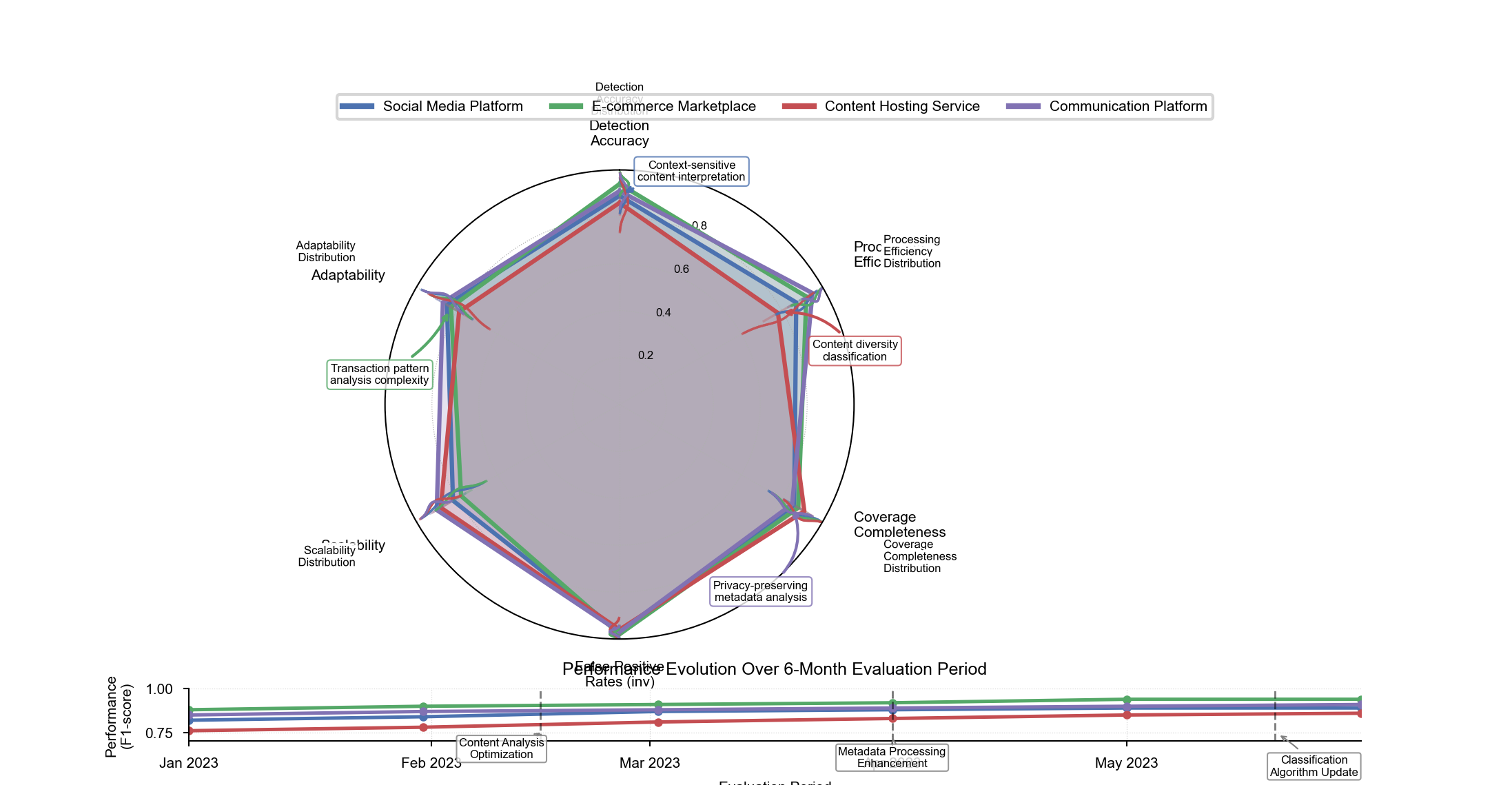

The DSA compliance monitoring system was evaluated across multiple digital service categories through controlled case studies designed to assess technical performance and compliance effectiveness. The case studies encompassed diverse platform types, including social media services, e-commerce platforms, content hosting services, and integrated digital environments. Gupta et al. (2021) described how "BISRAC can be used iteratively in banks to aid them to assess current information security posture," which parallels our iterative evaluation across digital service categories. The case studies revealed significant variations in monitoring effectiveness across platform types, with content-focused platforms requiring more specialized processing than transaction-oriented services. Ma et al. (2024) proposed techniques for "extracting monitoring rules from legislation and fund documentation," which influenced our approach to adapting monitoring rules across service categories. Figure 6 presents the comparative monitoring performance across case study platforms, highlighting domain-specific effectiveness variations.

Figure 6: Comparative Monitoring Performance Across Digital Service Categories

The figure displays a multi-dimensional performance comparison across the four case study platforms. The visualization uses a radar chart design with multiple performance dimensions radiating from the center (detection accuracy, processing efficiency, coverage completeness, false positive rates, scalability, and adaptability). Each platform category appears as a colored polygon overlay, with area size indicating overall monitoring effectiveness. The chart is augmented with statistical confidence intervals shown as translucent bands around each polygon, representing performance variability under different operational conditions. Specialized monitoring challenges appear as annotations at the polygon vertices where performance deviations are most significant. The visualization incorporates mini-charts embedded at each axis endpoint showing detailed performance distributions for that specific metric. A timeline element at the bottom tracks performance evolution over the six-month evaluation period, with event markers indicating when monitoring system optimizations were deployed.

The case study results validated the adaptability of the monitoring architecture to diverse platform environments while identifying specific challenges in content-focused services where context interpretation significantly impacts compliance assessment accuracy.

5. Challenges and Future Research Directions

5.1. Addressing Technical Barriers and Data Protection Constraints

Automated compliance monitoring systems face significant technical barriers related to data access, processing capabilities, and privacy constraints. The implementation of machine learning models for compliance verification requires access to representative training data while respecting data protection regulations, creating an inherent tension between monitoring effectiveness and privacy preservation. Data protection regulations limit the collection and processing of personal data, restricting the features available for compliance monitoring models. Fan et al. (2024) noted that "privacy concerns associated with the use of this data have led to legal regulations that impose restrictions on how such data is requested or processed," highlighting the fundamental challenge for monitoring systems. Technical solutions including privacy-preserving machine learning techniques, federated learning approaches, and differential privacy implementations offer potential pathways to balance monitoring requirements with privacy constraints. The development of privacy-by-design monitoring architectures requires embedding data protection principles into the core system design rather than implementing them as external constraints. The advancement of zero-knowledge proof techniques and secure multi-party computation creates opportunities for verifying compliance properties without accessing raw platform data. These approaches must be integrated with existing monitoring architectures to enhance privacy protection while maintaining verification capabilities.
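As one concrete instance of the differential privacy option mentioned above, the sketch below applies the Laplace mechanism to an aggregate count before it leaves the platform. It assumes that each user contributes at most one record to the count, so the sensitivity is 1. The released statistic and the ε value are hypothetical. The noise is drawn as the difference of two i.i.d. exponential variables, which is Laplace-distributed. Python's `random` module is used only for illustration; a production deployment would need a vetted DP library that handles secure randomness and floating-point attacks.

```python
import random
from typing import Optional


def dp_count(true_count: int, epsilon: float, sensitivity: float = 1.0,
             rng: Optional[random.Random] = None) -> float:
    """Laplace mechanism: add Laplace(0, sensitivity / epsilon) noise to a count.

    The difference of two i.i.d. exponential variables with rate 1/b is
    Laplace-distributed with scale b.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    rate = epsilon / sensitivity  # = 1 / scale
    return true_count + rng.expovariate(rate) - rng.expovariate(rate)


rng = random.Random(7)
# Hypothetical: weekly count of upheld appeals in one product, released with epsilon = 0.5.
print(round(dp_count(1_240, epsilon=0.5, rng=rng)))
```

Smaller ε values give stronger privacy and noisier counts. For counts in the thousands, noise with a scale of 2 barely affects trend monitoring, which is why aggregate compliance statistics are a natural first target for this technique.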

5.2. Adaptation to Evolving Regulatory Frameworks

The Digital Services Act represents an evolving regulatory framework that will continue to develop through implementation guidelines, court interpretations, and potential amendments. Compliance monitoring systems must adapt to these regulatory changes while maintaining operational continuity and verification effectiveness. The development of adaptive monitoring architectures requires modular design approaches where compliance rules can be updated without disrupting the underlying monitoring infrastructure. Wei et al. (2024) emphasized that "the banking sector must adapt to comply with regulations and leverage technology's opportunities to personalize customer experiences," which similarly applies to digital service platforms adapting to regulatory frameworks. Machine learning models must incorporate continuous learning capabilities to adapt to evolving interpretations of compliance requirements without complete retraining cycles. The implementation of regulatory change management processes within monitoring systems enables systematic tracking of requirement modifications and their impact on verification approaches. Monitoring systems must incorporate feedback mechanisms that capture compliance decisions from human experts and regulatory authorities to enhance adaptation capabilities. The development of computational legal reasoning components within monitoring systems offers potential for automated interpretation of regulatory updates and their translation into operational verification rules.
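A modular design in which rules can change without disrupting the monitoring infrastructure can be approximated by versioning rules by their effective date. In the sketch below, new versions are added alongside old ones, and each evaluation uses the version in force on a given date. This also lets historical events be re-assessed under the rule that applied at the time. The `RuleRegistry` class, the rule ID, the dates, and both thresholds are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Callable, Dict, List, Tuple

Check = Callable[[dict], bool]


@dataclass
class RuleRegistry:
    """Versioned compliance rules: new versions are added, old ones are never overwritten."""
    _versions: Dict[str, List[Tuple[date, Check]]] = field(default_factory=dict)

    def register(self, rule_id: str, effective_from: date, check: Check) -> None:
        versions = self._versions.setdefault(rule_id, [])
        versions.append((effective_from, check))
        versions.sort(key=lambda v: v[0])  # sort on dates only; functions are not comparable

    def evaluate(self, event: dict, on: date) -> Dict[str, bool]:
        """Evaluate every rule that has a version in force on the given date."""
        results: Dict[str, bool] = {}
        for rule_id, versions in self._versions.items():
            in_force = [check for start, check in versions if start <= on]
            if in_force:
                results[rule_id] = in_force[-1](event)  # latest applicable version wins
        return results


# Hypothetical rule and dates: later guidance tightens an explanation threshold.
registry = RuleRegistry()
registry.register("TR-01", date(2024, 1, 1), lambda e: e["explanation_completeness"] >= 0.7)
registry.register("TR-01", date(2025, 1, 1), lambda e: e["explanation_completeness"] >= 0.9)
event = {"explanation_completeness": 0.8}
print(registry.evaluate(event, date(2024, 6, 1)))  # {'TR-01': True}
print(registry.evaluate(event, date(2025, 6, 1)))  # {'TR-01': False}
```

Keeping superseded versions rather than overwriting them gives the change-management trail described above: one can always show which rule version produced a given compliance decision.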

5.3. Integration with Broader Compliance Management Systems

Automated compliance monitoring systems operate within broader organizational compliance frameworks that encompass manual processes, governance structures, and reporting mechanisms. Integrating monitoring systems effectively with these frameworks requires standardized interfaces, consistent compliance taxonomies, and coordinated verification approaches. Aligning automated monitoring outputs with organizational reporting structures enables consistent documentation of compliance status across digital service operations. Ma et al. (2024) aim at "extracting monitoring rules from legislation and fund documentation and at providing automated support for enabling the runtime verification," demonstrating the importance of integrated approaches to compliance management. Explainable AI techniques within monitoring systems make automated compliance assessments more interpretable for human reviewers and regulatory authorities. Standardized compliance interfaces enable interoperability between monitoring systems and broader governance, risk, and compliance (GRC) platforms. Integrating automated monitoring with incident management systems creates efficient workflows for resolving detected compliance issues through coordinated remediation. Compliance analytics across integrated systems improve an organization's ability to identify systemic compliance patterns and implement preventive controls. These integration approaches must accommodate variations in compliance maturity across organizations through adaptable implementation models.
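A standardized compliance interface ultimately depends on a shared record format. The sketch below shows one hypothetical interchange record for exporting findings to GRC or incident management tools. The record links each finding to a formalized rule, uses a shared domain taxonomy, carries a human-readable explanation for reviewers, and serializes to JSON. The field names, severity levels, and example values are assumptions for illustration, not a published schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Tuple

SEVERITIES = ("low", "medium", "high", "critical")


@dataclass(frozen=True)
class ComplianceFinding:
    """Hypothetical interchange record for exporting findings to GRC or incident tools."""
    finding_id: str
    rule_id: str        # links the finding back to a formalized requirement
    domain: str         # shared compliance taxonomy, e.g. "transparency"
    severity: str
    detected_at: str    # ISO 8601 timestamp in UTC
    explanation: str    # human-readable rationale for reviewers and regulators
    evidence: Tuple[str, ...] = ()
    status: str = "open"

    def __post_init__(self) -> None:
        if self.severity not in SEVERITIES:
            raise ValueError(f"unknown severity: {self.severity!r}")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


finding = ComplianceFinding(
    finding_id="F-0001",
    rule_id="TR-01",
    domain="transparency",
    severity="high",
    detected_at=datetime(2024, 6, 1, 10, 0, tzinfo=timezone.utc).isoformat(),
    explanation="Explanation completeness 0.55 is below the 0.80 threshold.",
    evidence=("recsys-api/response/8841",),
)
print(finding.to_json())
```

Validating the severity at construction time means that malformed findings fail inside the monitoring system rather than inside a downstream tool that may silently drop them.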

Acknowledgment

I would like to extend my sincere gratitude to Chaoyue Jiang, Guancong Jia, and Chenyu Hu for their groundbreaking research on cultural analytics and machine learning applications in digital content localization as published in their article titled "AI-Driven Cultural Sensitivity Analysis for Game Localization: A Case Study of Player Feedback in East Asian Markets"[14]. Their innovative methodology for automated cultural sensitivity analysis has significantly influenced my understanding of cross-cultural data processing techniques and provided valuable inspiration for my own research in automated compliance monitoring across diverse digital platforms.

I would also like to express my heartfelt appreciation to Jiaxiong Weng and Xiaoxiao Jiang for their innovative study on movement analysis using artificial intelligence techniques, as published in their article titled "Research on Movement Fluidity Assessment for Professional Dancers Based on Artificial Intelligence Technology"[15]. Their comprehensive approach to feature extraction from complex temporal sequences and their machine learning model architecture have significantly enhanced my knowledge of pattern recognition in dynamic systems and inspired the temporal verification components in my research framework.