1. Introduction

With advances in technology and growing demand for new forms of entertainment, XR products, an emerging generation of integrated and innovative Information Technology (IT), are gaining increasing public attention.

XR is a general term for Virtual Reality (VR) and Augmented Reality (AR). VR products give users a feeling of immersion in the virtual world by employing 3D near-eye displays and tracking body movements in real time [1], while AR products mix the real world with 3D content generated by computers. In addition, AR products can address multiple senses, such as sight and smell.

Some big IT firms, such as Facebook, are interested in XR and have invested heavily in the industry [2]. It is predicted that overall investment in XR will reach $74.73 billion in 2026. By then, XR technology is expected to be widely used in education, healthcare, video games, live streaming, engineering and other fields [3].

Given this trend, researching XR products is worthwhile. Although the use of XR products is flourishing, some problems remain unsolved, so providing new views on those issues is important. I am also keen on researching the latest technology, and studying the hardware of XR products may give me insight into electrical engineering, which is my intended major. Moreover, it is exciting to take a closer look at this technology, because XR products seem to come from the future and already represent a major trend in electronic devices.

In the Literature Review part, this article covers different types of XR, three cutting-edge XR products, basic hardware technologies, a general description of software, and the limitations that prevent an immersive experience. The Discussion part then introduces Apple Vision Pro and shows its detailed structure, followed by the core technologies it uses and its drawbacks.

The novelty of this article is that it identifies four factors that influence immersive experience in the Literature Review part and carries out a case study of Apple Vision Pro in the Discussion part, examining its detailed structure, its unique technologies, and how those technologies address the lack of immersion. The Discussion part also analyses Apple Vision Pro through its display, chips and sensors and compares it with other XR products. It concludes that Apple Vision Pro makes a breakthrough, stands out from existing XR products, and is leading other XR products to a higher level. In addition, this article examines some existing XR products, such as Microsoft HoloLens2, to see why they cannot achieve a highly immersive experience.

The following parts talk about different types of XR, three cutting-edge XR products, basic hardware technologies, a general description of software and limitations that prevent the achievement of immersive experience.

1.1. Types of XR

Figure 1 shows the composition of XR: from left to right, VR, AR and then MR. This article discusses VR and AR.

First of all, by definition, VR and AR differ in the user experience they offer. In VR, users are immersed only in the virtual environment produced by the equipment they wear, which strengthens their experience of the virtual world. By contrast, AR is a mixture of the virtual and real worlds, which lets users see reality while looking at information generated by the headset. This helps prevent users from getting hurt, because they can see the condition of the real world, but it reduces the realism and reproducibility of the virtual content.

1.2. Cutting-edge products

In this part, this article analyses three cutting-edge products and presents their features and parameters.

1.2.1. Microsoft HoloLens2 (MH2)

As shown in Figure 2, MH2 was released in 2019. It weighs 566 grams, with a field of view of 96.1 degrees and a resolution of 2048 x 1080 pixels per eye [5]. Its display is more sophisticated in that the display part (the glasses) is adjustable: it can rotate 90 degrees from vertical to horizontal. This design helps users relieve fatigue and makes it convenient to talk with others, as they do not have to take off the whole headset [6]. In addition, MH2 can be fitted with additional equipment, such as helmets for use in construction, and can therefore adapt to the needs of different environments [6].

1.2.2. Meta Quest 3 (MQ3)

As shown in Figure 3, MQ3 was released in 2023 and weighs only 515 grams (the lightest among the three). Notably, the resolution per eye increased from 1832 x 1920 pixels in Meta Quest 2 to 2064 x 2208 pixels in MQ3 [7]. The use of a fully movable dual display also gives MQ3 a wider field of view, reaching 110°+10-15% [8]. Users can browse websites and use applications like WhatsApp to call their friends. Moreover, MQ3 has better sound quality than Meta Quest 2, with better bass performance and a volume output range increased by 40% [9]. MQ3 is operated with handheld controllers, and its games are customized to the space the user is in: a total of three cameras and sensors scan the space and then model it [7]. More specifically, some games on MQ3 combine VR and AR, which is the selling point of MQ3 [10]. For example, games like Drop Dead: The Cabin, Eleven: Table Tennis, and Blaston are all XR games [11].

1.2.3. PlayStation VR2

As shown in Figure 4, the PlayStation VR2 weighs 560 grams. It was released on February 22, 2023, and is used with the PlayStation 5 [13]. This product contains many innovations, one of which is Sense Technology. This technology gives users a more immersive experience by providing haptic feedback [14], such as shock waves from explosions and the fluctuation of water. Moreover, VR2 has a more colorful 2000 x 2040 OLED panel than the previous version, and its refresh rate reaches up to 120 Hz, which gives users a relatively high-quality gaming experience [15].

1.3. Basic technologies

This part describes the very basic technologies used in XR products and is divided into two main parts, the hardware and the software.

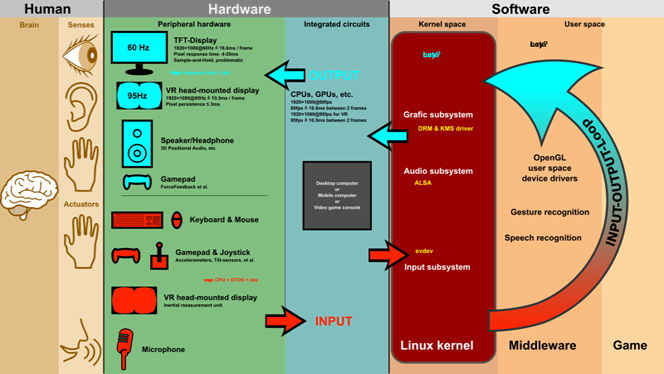

As shown in Figure 5, three parts, namely humans, hardware and software, contribute to the input and output loop of the whole process of XR products. The loop starts with sensors such as cameras or audio equipment to generate a realistic 3D world [17, 18] and then gives feedback to users through monitors and speakers. The following parts describe the hardware and software in XR products.

1.3.1. Hardware

1.3.1.1. Inertial Measurement Unit (IMU)

An IMU, which commonly consists of an accelerometer and a gyroscope, is used to capture movements of users’ bodies, heads and hands [20].

An accelerometer measures the acceleration of an object by detecting the displacement of a mass connected to a spring. As the mass moves, it stretches or compresses the piezoelectric or resonant element, which in turn produces an electrical charge or a change in frequency. There are five types of accelerometer: piezoresistive, servo, piezoelectric, capacitive and frequency-change. Different types have different characteristics and are used in different situations [21].
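The spring-mass principle can be illustrated with a minimal Python sketch. It assumes an ideal, undamped spring that obeys Hooke's law, so that the spring force k·x balances m·a. The function name and the numerical values are hypothetical and are not taken from any real sensor.

```python
def acceleration_from_displacement(spring_constant, mass, displacement):
    """Estimate acceleration (m/s^2) from the displacement of the proof mass.

    Assumes an ideal spring (Hooke's law): k * x = m * a.
    """
    if mass <= 0:
        raise ValueError("mass must be positive")
    return spring_constant * displacement / mass


# Hypothetical values: k = 100 N/m, m = 10 g, x = 1 mm
a = acceleration_from_displacement(100.0, 0.01, 0.001)
print(f"acceleration = {a:.2f} m/s^2")
```

A real accelerometer also has damping and converts the displacement into an electrical signal, which this sketch leaves out.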

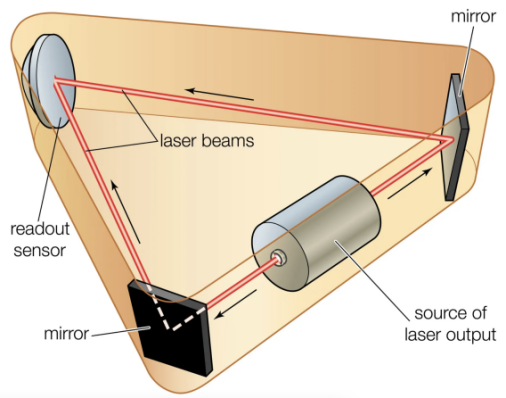

As shown in Figure 6, a gyroscope is used to measure the orientation of an object by detecting its deviation. Figure 6 represents the working principle of the ring laser gyroscope. The ring laser gyroscope uses the Sagnac effect, while the fibre-optic gyroscope routes light through a thin fibre tightly wrapped around a small spool [22].
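As a rough illustration of the Sagnac effect, the sketch below uses the standard textbook relation Δt = 4AΩ/c² for the difference in travel time between two beams circulating in opposite directions around a loop of enclosed area A that rotates at angular rate Ω about its normal axis. This relation is not taken from [22], and the ring size and rotation rate are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def sagnac_time_difference(enclosed_area, angular_rate):
    """Travel-time difference (s) between counter-propagating beams.

    Textbook Sagnac relation: delta_t = 4 * A * Omega / c^2.
    """
    return 4.0 * enclosed_area * angular_rate / SPEED_OF_LIGHT ** 2


# Hypothetical ring enclosing 0.01 m^2, rotating at 1 degree per second
dt = sagnac_time_difference(0.01, math.radians(1.0))
print(f"time difference = {dt:.3e} s")
```

The time difference is extremely small, which is why practical gyroscopes measure it indirectly, through interference or a beat frequency, rather than timing the beams directly.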

1.3.1.2. Optical facilities

There are various imaging systems used in XR headsets, but the following discusses only two of them: off-axis reflection and lenses.

Off-axis reflection is a technology that focuses light at the focal point of a parabolic mirror, making the view closer to the real world and enhancing the quality of the field of view [3].

The use of the Fresnel lens dramatically decreased the weight of headsets. A Fresnel lens discards the glass in the middle of the lens, which does not contribute to focusing light, so the lens is lighter. Moreover, the use of a curved Fresnel lens increased sharpness while enlarging the Field-of-View (FOV) [23], letting users feel more comfortable while using XR products.

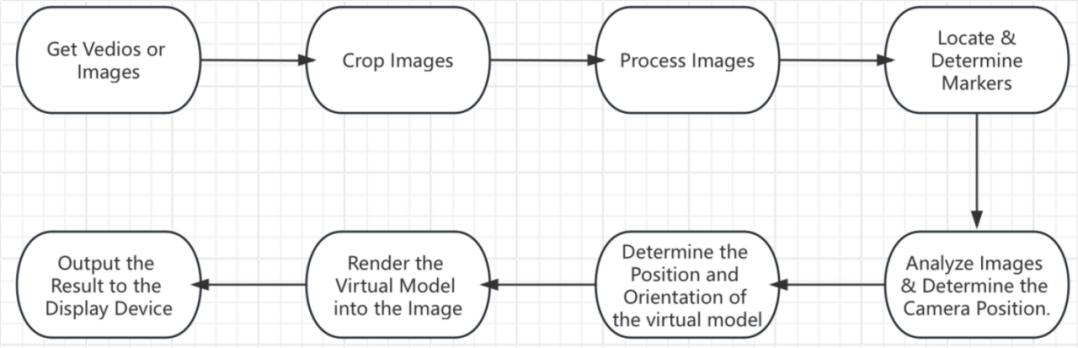

1.3.2. Software

As shown in Figure 7, the working principle of XR technology processing is represented. The following interprets some key parts of this process. The first step in creating a virtual world is 3D modeling, which means building a 3D digital model of an object on the computer [24]. Methods of 3D modeling include using applications like 3ds Max, Maya and Blender, or using equipment to scan objects in the real world. Next, a technology called Simultaneous Localization and Mapping (SLAM) is used to ensure real-time positioning. AR products are, in essence, more sophisticated. In addition to the technologies mentioned above, AR products need to take the combination of the real world and the virtual one into consideration. For example, virtual objects need to be lit with the same light intensity and direction as the real world. ARCore (one of the mainstream AR software development kits) offers a solution: it analyzes pictures taken by cameras and extracts information about the light [3].

1.4. Application

XR products are used in many areas like education, entertainment, healthcare and so on. The following part talks about the different uses of XR products in different areas.

1.4.1. Entertainment

1.4.1.1. Games

As Figure 8 shows, XR games have gained widespread popularity because real-time interaction gives them a more immersive and interactive experience than traditional games [26]. There are already many XR games made for adults, but there is high demand for XR games for children, and competition in this segment is low, which is friendly for startups [2].

1.4.1.2. Live concerts

As shown in Figure 9, innovation in XR products makes it easier for people to ‘visit’ museums, ‘attend’ live events and ‘go to’ theme parks. Taking live music concerts as an example, fans can see digital elements like animations and holograms, which makes concerts more striking and appealing [26]. Moreover, more and more music festivals, such as Coachella, Lollapalooza, Tomorrowland and Sziget Festival, and top artists, such as Coldplay and Imagine Dragons, are embracing XR [2].

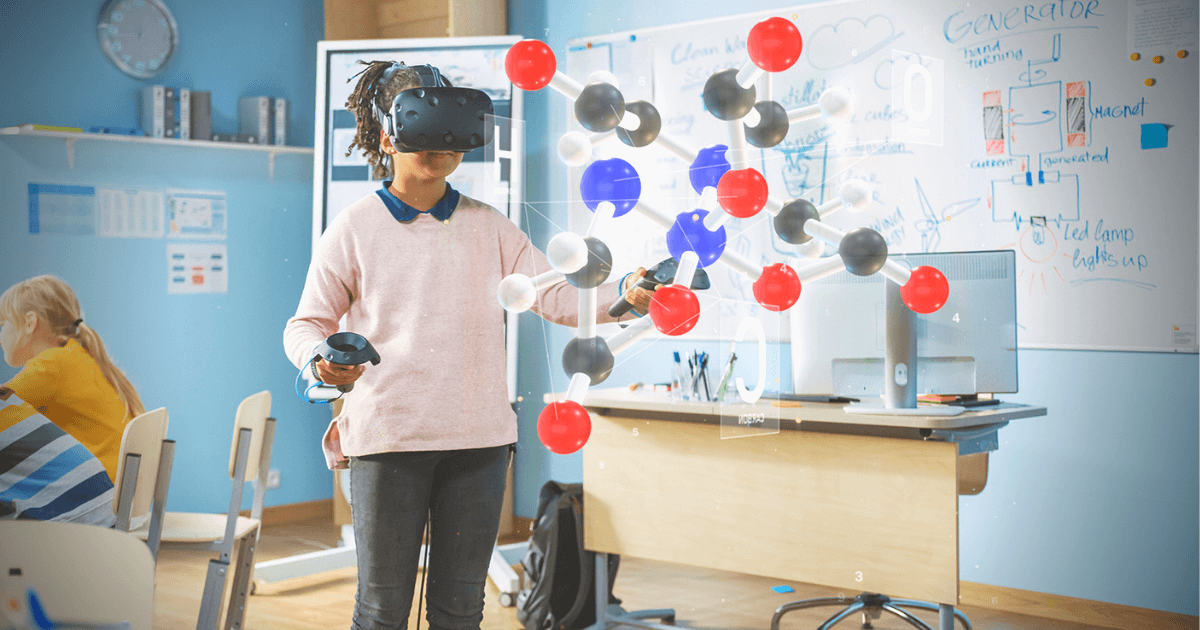

1.4.2. Education

As shown in Figure 10, XR products are changing the way of learning by giving students more memorable ways to acquire knowledge [29]. For students of science, for example, XR products can show the structure of molecules or the motion of an object clearly and directly.

As shown in Figure 11, the use of XR products has risen dramatically in the Architecture, Engineering, and Construction (AEC) industry [30]. This is because XR products provide safe training for workers.

1.5. Summary

By researching the literature and existing XR products, this article finds that the core factor preventing the prevalence of those products is the lack of immersion, which makes it hard for users to get into the virtual world. Here are some reasons.

1.5.1. High latency

Real-time tracking of the user’s head movement is important [31]. Users commonly complain about high latency and disorientation in XR products [20], which may cause virtual reality sickness, a kind of motion sickness. John Carmack recommends a latency of 20 ms, but most products have a latency above this value, which impairs the immersive experience. Meta Quest 3, for example, has an average latency of 31 ms [32].

1.5.2. Lack of real interaction

An immersive experience lets users interact realistically with objects in the virtual world, for example by reaching out and grabbing objects [31]. Therefore, an accurate tracking system is needed to capture the movements of hands and heads precisely, so that users see themselves grabbing the objects they intend to. Mark Gurman criticized the tracking of Meta Quest 3, including face, eye and hand tracking, as inaccurate in both the headset and the hand controllers [33].

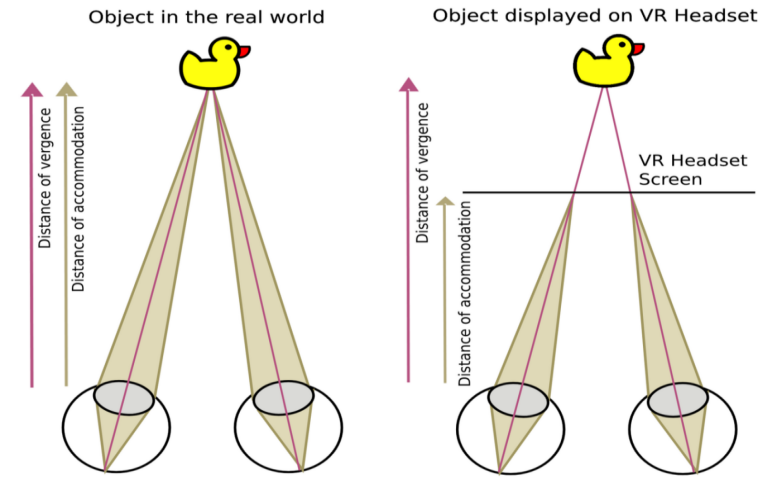

1.5.3. Vergence Accommodation Conflict (VAC)

As shown in Figure 12, VAC is a visual phenomenon that occurs when the brain receives mismatching cues between the vergence and the accommodation of the eyes, and it commonly occurs in XR headsets. In reality, people change the shape of their crystalline lenses to focus light from objects at any distance; this is accommodation. In XR headsets, all light is emitted from a display at a fixed distance. As the eyes converge on a virtual object, either the shape of the lenses (the glass ones in the headset) or the displayed distance of the virtual object must constantly adjust to keep this fixed-distance light in focus; otherwise the mismatch often causes eye strain or, worse, orientation issues [31]. Karl Guttag of KGOnTech shared his experience in an article: “In the longer session with the Microsoft HoloLens2, I felt mild to moderate pain in my eyes” [34].
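The size of this mismatch can be estimated with a short Python sketch. It uses two standard geometric quantities: the vergence angle of two eyes fixating a point straight ahead, and the difference between vergence demand and accommodation demand expressed in diopters (1/metres). The interpupillary distance, object distance and focal distance below are hypothetical illustration values, not measurements of any headset.

```python
import math


def vergence_angle_deg(ipd_m, distance_m):
    """Angle (degrees) between the two lines of sight fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def vac_mismatch_diopters(vergence_distance_m, focal_distance_m):
    """Difference between vergence demand and accommodation demand, in diopters."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


# Hypothetical case: a virtual object rendered 0.5 m away
# while the optics keep the display in focus at 2.0 m.
print(f"vergence angle: {vergence_angle_deg(0.063, 0.5):.2f} degrees")
print(f"mismatch: {vac_mismatch_diopters(0.5, 2.0):.2f} diopters")
```

The mismatch is zero only when the virtual object is rendered at the focal distance of the optics, and it grows quickly as the object moves closer to the eyes.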

1.5.4. Lack of 3D spatial audio

The acoustic experience has to be coordinated with the visual experience, which means the perceived location of a sound needs to coincide with the position seen by the user. The challenge is that different people perceive the same acoustic signal differently, which reduces the feeling of immersion [31].

After comparing some products and technologies, this article finds that Apple Vision Pro has an advantage in providing the experience of immersion for its users. In the discussion part, this article will take Apple Vision Pro as an example to analyse its structure and features and then discuss how it enhances users’ experience.

2. Discussion

Different from the Literature Review part, the Discussion part provides a case study of only Apple Vision Pro. It shows Apple Vision Pro’s structure and then introduces its unique and innovative hardware technologies that help to improve users’ immersive experience. Those technologies include Micro OLED, dual chips and so on.

2.1. Introduction to Apple Vision Pro (AVP)

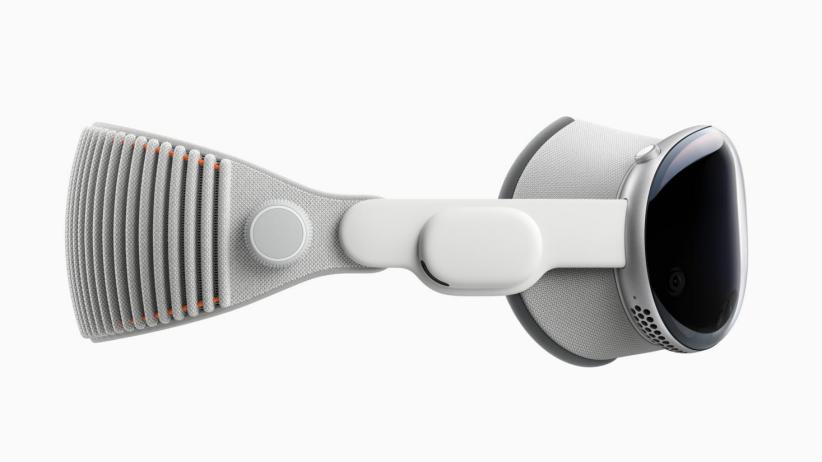

As shown in Figure 13, AVP was released on February 2, 2024. It is powered by visionOS 2 and weighs 600 to 650 grams [36]. The basic configuration costs $3,499. Apple sees AVP as its first “spatial” computer [37], signalling how immersive an experience it can offer users. Unlike the products mentioned above, AVP focuses not only on entertainment but also on practicality, such as online communication and online working [38]. In the Photos application, for example, previously shot pictures come alive when users watch them later, and Spatial Audio helps users relive their favorite memories [37]. The word “computer” shows that AVP can do what a laptop does, and more. In FaceTime, for example, receivers can see life-sized callers, together with their hand movements, in front of their eyes [37]. Combined with Spatial Audio, this makes a meeting more natural [39]. Figure 14 shows a woman wearing an AVP having a meeting with three other people.

Besides, users can customize their AVP’s wallpaper as shown in Figure 15 and immerse themselves into beautiful landscapes like national parks or even the surface of the moon [36].

An impressive feature of AVP is that users can turn a crown to switch between VR and AR. Moreover, there is a button on top of the headset which is used to take photos [39].

AVP has been widely praised. Mark Zuckerberg, CEO of Meta, praised the display resolution and eye tracking of AVP [40]. Samuel Axon of Ars Technica praised the intuitiveness of the user interface [41]. Moreover, Nilay Patel of The Verge said that AVP has a more premium build quality than other headsets [42].

2.2. Structure of AVP

AVP integrates high-resolution displays, low-latency chips, many sensitive sensors, 3D spatial audio and motorized adjustment of Interpupillary Distance (IPD), and it supports Optic ID.

2.2.1. Display

As shown in Figure 16, AVP has two 100 Hz Micro OLED displays inside, each reaching a resolution of 3660x3200 pixels, and an additional OLED display on the front of the headset [43, 44]. Apple created the Micro OLED displays with Sony (or possibly TSMC); each is approximately 1 inch in size and reaches approximately 4808 Pixels Per Inch (PPI), which is higher than most current products [45].
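Pixel density follows from the resolution and the physical size of the panel. The sketch below assumes that the quoted size refers to the panel diagonal and uses a round value of exactly 1.0 inch, which is an assumption; the text gives the size only approximately.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """PPI = diagonal length in pixels / diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Resolution from the text; the 1.0 inch diagonal is an assumed round value.
print(round(pixels_per_inch(3660, 3200, 1.0)))
```

With these inputs the result is of the same order as the approximately 4808 PPI cited above. It does not match exactly because the result scales inversely with the true diagonal, which is only known approximately here.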

2.2.2. Chips

As shown in Figure 17 [47], the M2 and R1 chips work together to keep AVP running well; the M2 chip is located on the left and the R1 on the right. The M2 integrates an 8-core CPU with 4 performance cores and 4 efficiency cores, a 10-core GPU and a 16-core Neural Engine, and it is equipped with 16GB of unified memory. The R1 achieves 256GB/s memory bandwidth and has a latency of only 12 ms [46].

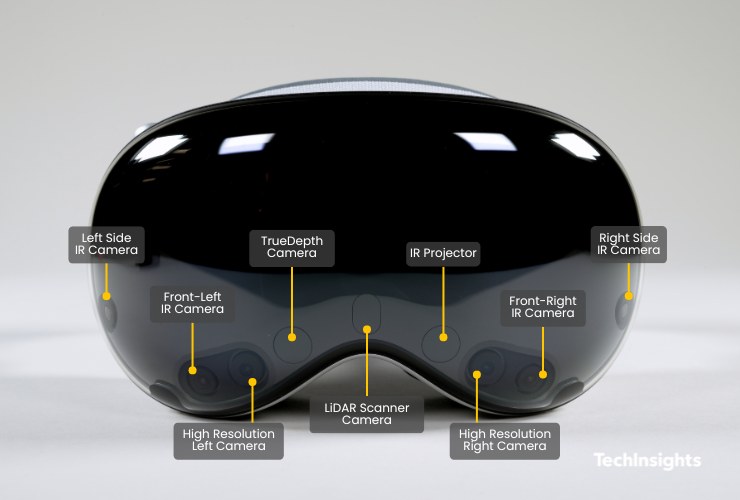

2.2.3. Sensors

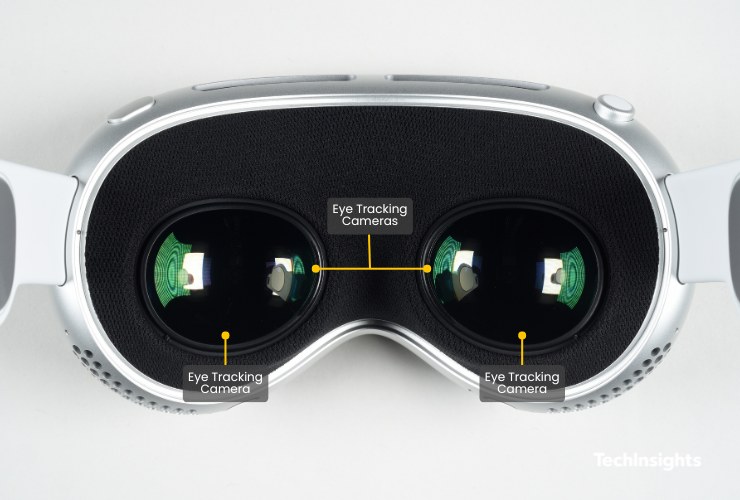

As shown in Figure 18, AVP has two main cameras, six face-tracking cameras, four eye-tracking cameras (as shown in Figure 19), a TrueDepth camera, a LiDAR scanner camera, four IMUs, a flicker sensor and an ambient light sensor [46]. The Infra-Red (IR) cameras and projector shown in Figure 18 are used to track the movements of users’ hands and fingers [48]. Figure 19 shows an inner eye-tracking camera.

2.2.4. Camera

AVP can take photos like an iPhone. It has a stereoscopic 3D main camera system that enables spatial photo and video capture. The camera has an 18 mm lens with an ƒ/2.00 aperture and a resolution of 6.5 stereo megapixels [46].

2.2.5. Audio system

AVP uses 3D spatial audio to enhance immersion. It has a six-mic array with directional beamforming and supports personalized Spatial Audio and audio ray tracing. Moreover, for users with AirPods, AVP supports an H2-to-H2 ultra-low-latency connection to AirPods Pro 2 with USB-C and AirPods 4. On AVP, users can play audio in formats including AAC, MP3, Apple Lossless, FLAC, Dolby Digital, Dolby Digital Plus, and Dolby Atmos [46].

2.2.6. Interpupillary Distance (IPD)

IPD is the distance between the centers of the pupils, measured in millimeters [49]. AVP’s IPD range runs from 51 mm to 75 mm, and it is adjusted by moving the displays in front of users’ eyes [46, 47].

2.2.7. Optic ID

Optic ID is a new biometric authentication technology introduced by Apple with the Apple Vision Pro. Following Touch ID and Face ID, Optic ID authenticates users by iris recognition. Other devices, such as Microsoft HoloLens2, do not have Optic ID. As a security safeguard, the probability that a person randomly chosen from the population could unlock Apple Vision Pro is less than 1 in 1,000,000. For further protection, Optic ID allows up to five failed attempts before the passcode is required [50].
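The two figures above can be combined into a rough upper bound on the chance that a random person gets in before the attempt limit is reached. The sketch assumes that the five attempts are independent and that each has the quoted worst-case probability of 1 in 1,000,000; both are simplifying assumptions made for illustration and are not statements from Apple.

```python
FALSE_MATCH_PROBABILITY = 1 / 1_000_000  # upper bound quoted in the text
MAX_FAILED_ATTEMPTS = 5                  # attempts allowed before the fallback


def probability_of_unlock(p_single, attempts):
    """Chance that at least one of `attempts` independent tries succeeds."""
    return 1.0 - (1.0 - p_single) ** attempts


p = probability_of_unlock(FALSE_MATCH_PROBABILITY, MAX_FAILED_ATTEMPTS)
print(f"upper bound over {MAX_FAILED_ATTEMPTS} attempts: {p:.2e}")
```

Under these assumptions the bound stays at roughly five in a million, so the attempt limit keeps the overall risk close to the single-attempt figure.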

2.3. Technologies enhancing immersion

The Literature Review part identified some limitations of XR headsets, including high latency, lack of real interaction, Vergence Accommodation Conflict (VAC) and lack of 3D spatial audio, that prevent headsets from providing an immersive experience. This part analyses AVP’s core technologies and compares AVP with other headsets in terms of the use of those technologies.

2.3.1. Micro OLED display & fully laminated triple pancake lenses

AVP’s display together with its lenses addresses the problem of VAC. Each Micro OLED display emits its own light, which the lenses can then easily adjust and guide to the right projection place. Therefore, the Micro OLED displays and the fully laminated triple pancake lenses together address the problem of VAC [51].

2.3.1.1. Micro OLED

By integrating micro-scale display elements, Micro OLED achieves a high resolution and therefore presents vivid pictures, which enhances immersion.

Micro OLED is a micro-display device built on a single-crystal silicon semiconductor substrate. It integrates tens of millions of transistors in a Complementary Metal-Oxide-Semiconductor (CMOS) driver circuit. OLED organic materials are evaporated onto the CMOS driver circuit to form light-emitting diodes, which enables high-resolution, tiny micro-display applications.

Micro OLED can precisely control individual pixels, and the module has a rapid response time, which effectively reduces color retention when the displayed scene changes rapidly. Moreover, because there is no backlight, Micro OLED devices are thinner, lighter, and more power-efficient. They can achieve a "totally black" color effect and thus a higher display contrast ratio. According to [52], the screen resolution that meets the visual needs of the human eye is calculated to be 7200×8100 pixels per eye.
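To put the target resolution in perspective, the following sketch compares it with the per-eye resolution that this article reports for AVP (3660x3200 pixels). Only the pixel counts come from the text; the helper name is illustrative.

```python
def pixel_count(width_px, height_px):
    """Total number of pixels on a panel."""
    return width_px * height_px


target = pixel_count(7200, 8100)  # resolution said to meet the needs of the eye [52]
avp = pixel_count(3660, 3200)     # AVP resolution per eye, as reported in this article
fraction = avp / target

print(f"AVP reaches {fraction:.1%} of the target pixel count")
```

By this measure AVP provides roughly one fifth of the target pixel count per eye, so there is still considerable room for display resolution to grow.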

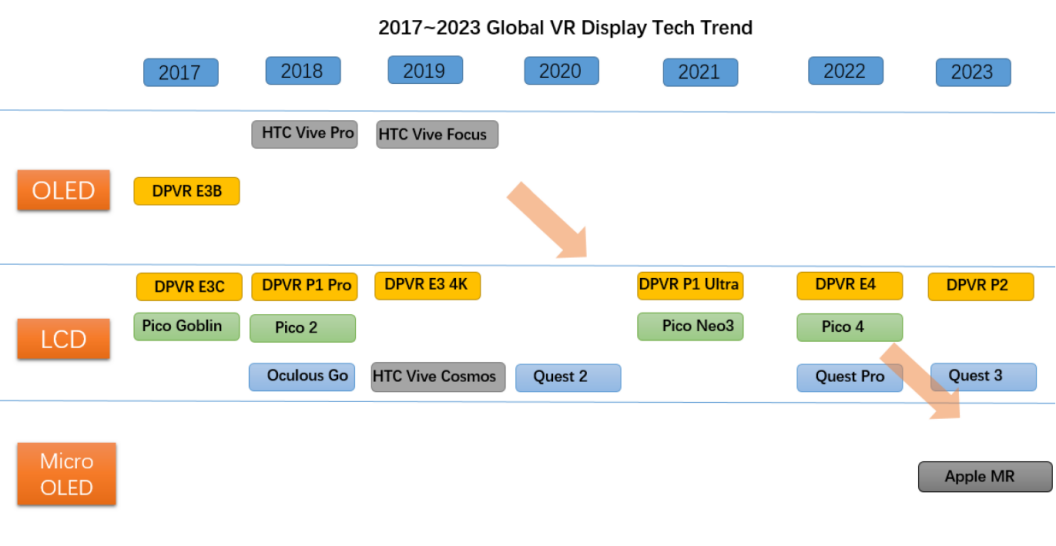

Figure 20 shows the types of screens used by different XR products between 2017 and 2023, from OLED to LCD and finally to Micro OLED. According to the figure, in 2023 Meta Quest 3 uses an LCD screen while AVP (labelled Apple MR) uses Micro OLED.

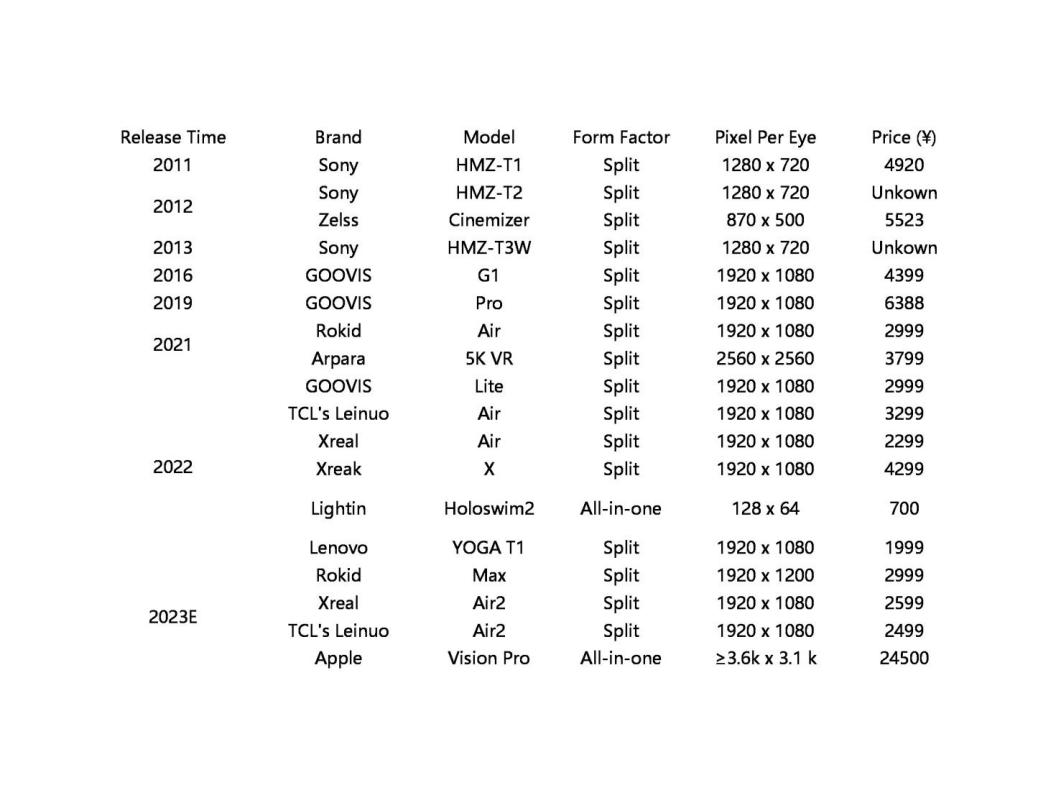

Figure 21 compares the resolution of different XR products (released from 2011 to 2023) that use Micro OLED. AVP has the highest resolution, reaching 3660x3200 pixels per eye. By contrast, other XR products have only about 2000 x 1000 pixels per eye.

As shown in Figure 21, Apple made a huge breakthrough in reaching a high resolution, and more and more XR products on the market use Micro OLED displays. According to Xueqiu, Apple is leading the use of Micro OLED displays, and more and more high-end products will be equipped with them [54].

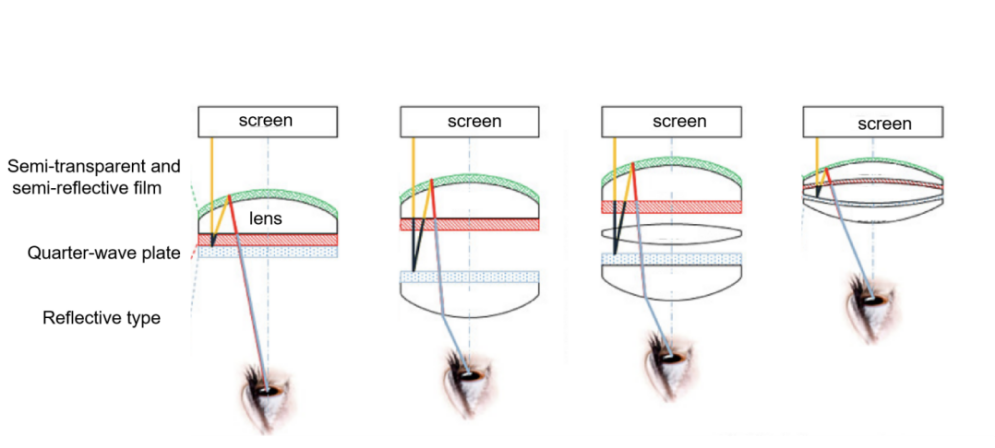

2.3.1.2. Fully laminated triple pancake lenses

Fully laminated triple pancake lenses lower the weight of the AVP headset and enhance the quality of its images.

To reduce the weight of headsets, manufacturers need to reduce the thickness of the optics as well as the distance between the optics and the display. Pancake lenses reach a distance of less than 1 mm between the optics and the display, whereas Fresnel lenses, mentioned earlier, need more than 50 mm.

Fully laminated triple pancake lenses further decrease the thickness of the optical modules and enhance the quality of the images. This is because AVP’s fully laminated triple pancake lenses use a material with a higher refractive index and higher transparency, and each lens has a relatively low reflectance, which improves light transmission efficiency and reduces stray light and ghosting [55]. Moreover, fully laminated triple pancake lenses fold the light path three times within the cavity, which attains a shorter focal length, leading to a dramatic reduction in volume while delivering exceptional image quality [56].

Figure 22 shows the evolution of pancake technology. From left to right, the picture shows a single pancake lens, dual pancake lenses, triple pancake lenses and then the fully laminated triple pancake lenses used in AVP [54].

As mentioned by Wellsenn XR, “optic modules change the path way of lights while enlarging the size of the images” [55]. Therefore the lenses adjust to focus the fixed-distance light emitted from the display, reducing the effect of VAC.

As shown in Table 1, AVP is the first to adopt fully laminated triple pancake lenses, and its optical modules are the lightest and thinnest, which makes AVP the most advanced product available today. By comparison, other XR products such as Meta Quest 3 use only dual pancake lenses.

| Product | Year | Pancake type | Description |
| --- | --- | --- | --- |
| PICO 4 | 2022 | single pancake lens | lower cost compared with multiple pancake lenses |
| Meta Quest 3 | 2023 | dual pancake lenses | costs more but with a higher visual effect |
| Huawei VR Glass | 2019 | triple pancake lenses | very light, at only 150 g weight and 23.55 mm thickness |
| Apple Vision Pro | 2024 | fully laminated triple pancake lenses | the lightest, at 19 g weight and 18 mm thickness |

2.3.2. Brand New Design of Chips

The combination of M2 and R1 increases computing power and shortens processing time. Therefore, it addresses the problem of high latency and achieves a higher level of immersion.

While M2 handles 3D rendering, core computing, AI neural networks, video decoding, display support and so on, R1 serves as a co-processor that provides powerful computing support [38]. As a co-processor, R1 performs real-time processing that overlays reality onto the operating system and the video of software running on the M2 processor. R1, a new chip designed by Apple, has its own traits. Firstly, R1 handles precise inputs from the device’s cameras, sensors, and microphones [57]. With the Low-Latency Wide (LLW) Input/Output memory of the R1, AVP can integrate high-resolution displays and sensors and achieve real-time control of large volumes of video data [58, 59]. Secondly, the R1 chip sends real-time images to the display within 12 milliseconds, which lowers the delay. This makes users feel less uncomfortable and dizzy when they view images of the real world.

According to TechInsights [44], the Apple R1 sensor processing unit is the heart of AVP, and at the core of R1 is the SK Hynix H5EA1A56MWA (made by SK Hynix Inc., a chip supplier from South Korea), which provides high memory density and power efficiency to support the real-time computing needs of AVP. Its package is manufactured by TSMC using Integrated Fan-Out Multi-Chip technology. This technology enables 1961 solder pads, making the package dense and efficient [58].

Table 2 compares the latency of several XR products. AVP has the lowest latency (12 ms). By contrast, other XR products usually have a latency of around 20 ms. Meta Quest 3 has a latency of 31 ms, which is the highest [32].

| Product | Latency Time |
| --- | --- |
| Apple Vision Pro | 12 ms |
| PlayStation VR1 | 18 ms |
| PICO 4 Ultra | 20 ms |
| Oculus Quest 2 | 15 - 20 ms |
| Meta Quest 3 | 31 ms |
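The figures in Table 2 can be checked against the 20 ms latency recommended by John Carmack (Section 1.5.1) with a short Python sketch. The data are copied from Table 2. Representing each entry as a (low, high) range and judging a product by its upper bound are choices made for this illustration.

```python
RECOMMENDED_MS = 20  # recommended latency cited in Section 1.5.1

# (low, high) latency in milliseconds, copied from Table 2
latency_ms = {
    "Apple Vision Pro": (12, 12),
    "PlayStation VR1": (18, 18),
    "PICO 4 Ultra": (20, 20),
    "Oculus Quest 2": (15, 20),
    "Meta Quest 3": (31, 31),
}


def exceeds_recommendation(latency_range, limit=RECOMMENDED_MS):
    """True if the worst-case latency is above the recommended limit."""
    _, high = latency_range
    return high > limit


# Rank by worst-case latency, lowest first
ranked = sorted(latency_ms, key=lambda name: latency_ms[name][1])
over_limit = [name for name in ranked if exceeds_recommendation(latency_ms[name])]

for name in ranked:
    print(f"{name}: up to {latency_ms[name][1]} ms")
print("Above the recommendation:", over_limit)
```

Ranking by the upper bound treats a product with a latency range conservatively, since a user may experience the worst case.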

2.3.3. Sensors - tracking systems & distance calculation

Eye-tracking and hand-tracking systems in AVP enhance real interaction between users and the virtual world. In addition, the bi-directional perspective achieved by the sensors makes interaction between people in the real world more natural, and the LiDAR scanner camera helps to enhance the experience of 3D spatial audio.

2.3.3.1. Tracking systems

AVP has an advanced tracking system that precisely captures hand and eye movements. It contributes to the real-time generation of images of the wearer’s eyes for socializing and enhances interaction with the virtual world.

The Near-Infrared (NIR) camera, made by Sony, enhances eye tracking and gesture recognition. The TrueDepth camera, made by STMicroelectronics, performs facial recognition and depth sensing [44]. In total, six cameras detect users’ hand movements, so even a small finger movement can be captured. Four NIR cameras inside the headset capture eye movements, so icons are selected when users look at them [48]. Thanks to the four NIR cameras, AVP enables a bi-directional perspective.

Unlike earlier products, which only let users see what is in front of their eyes (the forward perspective), AVP offers EyeSight (a name given by Apple itself), which provides a backward perspective. Interior cameras use infrared light to scan the user’s eyes and locate the pupils, and the reflected infrared light is used to generate images of the user’s eyes. There is one more step before the final image of the user’s eyes is presented to viewers. This step uses a technology called naked-eye 3D raster (based on a Lenticular Lens Raster), which achieves a sense of depth without 3D glasses. The core working principle of the Lenticular Lens Raster is that the raster divides images by viewing angle, so that viewers at different positions see different views of the image, which creates a sense of depth. Specifically, after scanning the user’s eyes, AVP models images of the eyes from different viewing angles. It then slices and recombines the images collected from those angles. Finally, the Lenticular Lens Raster projects the different slices towards their respective viewing angles on the display screen, letting people in front of the wearer see the wearer’s eyes from different views and making conversation more natural [54].
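The slice-and-recombine step can be sketched as a simple column interleaving of several views. This is a simplified illustration and not Apple's implementation: it assumes one pixel column per view under each lenticule and represents each view as a small grid of values, whereas a real display must match the slicing to the lens pitch.

```python
def interlace_views(views):
    """Interleave columns from several same-sized views.

    Output column x is copied from view (x mod number_of_views), so
    neighbouring columns belong to different viewing angles.
    """
    if not views:
        raise ValueError("at least one view is required")
    n = len(views)
    height, width = len(views[0]), len(views[0][0])
    for view in views:
        if len(view) != height or any(len(row) != width for row in view):
            raise ValueError("all views must have the same size")
    return [
        [views[x % n][y][x] for x in range(width)]
        for y in range(height)
    ]


# Two hypothetical 2x6 views, labelled by viewing direction
left = [["L"] * 6 for _ in range(2)]
right = [["R"] * 6 for _ in range(2)]

for row in interlace_views([left, right]):
    print("".join(row))
```

The lenticular lens placed over such an interlaced image then sends each group of columns towards its own viewing angle, so an observer on the left and an observer on the right see different views.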

2.3.3.2. LiDAR scanner camera

The LiDAR scanner camera helps assess the symmetry of the wearer’s head so that the speaker volume can be adjusted to deliver 3D Spatial Audio. It also helps to alleviate visual illusions by calculating how close the wearer is to objects in the real world.

The LiDAR scanner camera is assembled with lenses and filters and is used to calculate distances. More specifically, it uses a technology called Direct Time of Flight. It emits a laser beam whose reflection is detected by a Single Photon Avalanche Diode (SPAD) sensor; the distance is then calculated by multiplying the speed of light by the time taken for the beam to return and halving the result, because the beam travels to the object and back. Key components of the LiDAR scanner camera include Onsemi's Serial EEPROM, Texas Instruments' VCSEL array driver and Sony's SPAD array [44]. According to Graham Barlow, measuring distance is important because Apple Vision Pro makes icons float around the user [48], so it needs to know how close the user is to walls and furniture; this avoids visual illusions, such as items displayed in an inappropriate place, and enhances immersion. As mentioned before, the LiDAR scanner camera also helps to enhance the experience of 3D spatial audio. A wearer can experience an imbalanced volume of sound if he or she has asymmetric facial features, such as ears at different heights. The LiDAR scanner camera measures its distance to the user’s face and sends signals to the processors to determine whether the face is symmetrical. If it is not, AVP’s speakers compensate the volume so that the user experiences a 3D spatial audio environment [60].
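Direct Time of Flight converts a measured round-trip time into a distance. Because the laser pulse travels to the object and back, the one-way distance is half the product of the speed of light and the elapsed time. The following minimal Python sketch shows the calculation; the 10 ns measurement is a hypothetical value.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def distance_from_round_trip(round_trip_s):
    """One-way distance (m): the pulse travels out and back, so halve c * t."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# Hypothetical measurement: the pulse returns after 10 nanoseconds
print(f"{distance_from_round_trip(10e-9):.3f} m")
```

At room scale the round-trip times are only a few nanoseconds, which is why a fast detector such as a SPAD sensor is needed.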

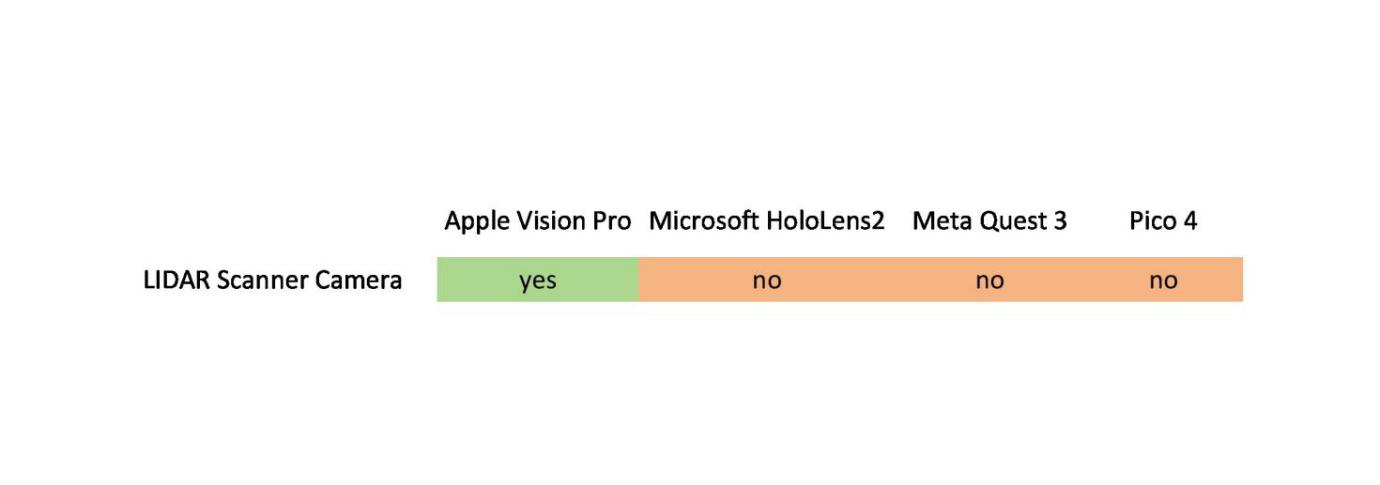

As shown in Figure 23, only AVP has a LiDAR scanner camera, which makes it second to none.

2.4. Counter arguments

Although AVP has a medley of breakthroughs, disadvantages exist. The following discusses some of them.

AVP is used together with an external battery, which means users have to bring the battery with them wherever they go and, in Mark Spoonauer’s words, makes users “feel like an anchor into the past” [62].

Moreover, AVP has many delicate and fragile components in the headset, so it is not favored in harsh environments like construction sites. As a result, workers there cannot benefit from the technology [63].

Besides, the brand and those emerging technologies make AVP very expensive, which puts it beyond use in fields like construction [63].

Lastly, Nilay Patel of The Verge said that there is "so much technology in this thing that feels like magic when it works and frustrates you completely when it doesn't" [42]. Also, some big apps like Netflix and YouTube are missing, and AVP is heavier than others like Meta Quest 3, so users may feel uncomfortable after wearing it for 30 minutes [62]. Moreover, the lenticular lens used to split the images of the users’ eyes disperses brightness, making the images dimmer and lower in resolution [54].

However, the demerits mentioned above are not core problems. For example, the external battery and the limited number of applications would not badly affect users’ immersive experience.

3. Conclusion

In its title, this article asks a question: How Does Apple Vision Pro Increase Immersive Experience? By analyzing the factors that hinder immersion in the Literature Review part, this article identifies several difficulties, including high latency, lack of real interaction, Vergence Accommodation Conflict (VAC), and lack of 3D spatial audio. In the Discussion part, through a case study of Apple Vision Pro, this article finds the following innovative hardware technologies that improve immersion:

1. Micro OLED features the integration of a very large number of tiny display elements. Displays evolved from OLED to LCD and finally to Micro OLED, and each generation had its own breakthrough. Micro OLED, as the leading display technology in XR headsets, achieves a higher resolution and therefore solves the problem of low image quality.

2. Fully laminated triple pancake lenses are Apple’s innovation. They use a material with a higher refractive index and higher transparency and achieve both higher light transmission efficiency and lower weight, solving the problems of heaviness and low image quality. The lenses together with the display address the problem of VAC.

3. The dual chips feature efficient real-time processing. Thanks to the specialization of M2 and R1, they achieve a higher level of 3D rendering and solve the problem of high latency.

4. A large number of advanced sensors achieve precise hand and eye tracking and contribute to the generation of images of the wearer’s eyes and to accurate distance calculation. Those sensors not only improve the interaction between the virtual world and the real one, but also enhance the interaction between people when the wearer communicates with others.

As for the prospects of Apple Vision Pro, there is still work to do. First of all, it needs to lower its price to become more prevalent. Although it now has much high-tech equipment and introduces new innovations, competitors like Meta may in time also adopt Micro OLED in their products. Therefore, to remain competitive, appropriately lowering the price is crucial in addition to innovation. Secondly, Apple Vision Pro can improve EyeSight to increase its resolution and brightness. As mentioned before, the lenses used to split the images of the user’s eyes make the images dimmer because they disperse brightness, so Apple could look for alternatives to solve this problem.

In the future, XR headsets will be as widely used as mobile phones are today, benefiting from hand operation and 3D interfaces. This will occur when XR headsets are light enough and able to integrate all components into one unit [64].